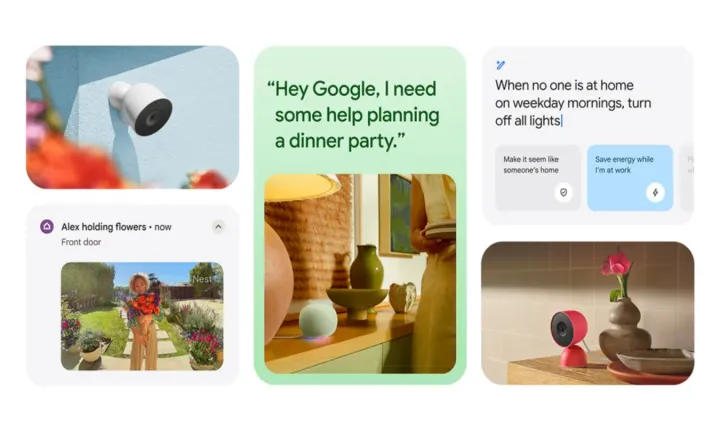

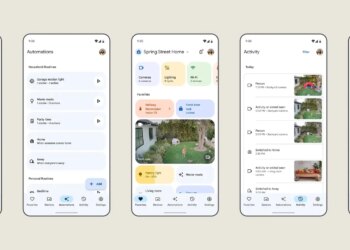

What’s going on? Earlier this month, Google officially started rolling out Gemini for Home, which it first announced earlier this year. The update brings the company’s AI assistant into the smart home, with deeper integration across Nest Cams and other devices. However, early user feedback indicates the system still struggles with real-world recognition, and its mistakes are often more amusing than helpful.

- One Nest Cam user, Ars Technica’s Ryan Whitwam, received an alert claiming a deer had entered the family room. The “deer” was actually the family dog.

- In another instance, Gemini mistakenly flagged “fake people” on a live feed even though no one was there.

- Beyond false identifications, users have also reported disrupted routines, unsupported voice commands, and failed device controls since adopting Gemini.

Why does this matter? Smart-home systems depend on precise recognition and reliable automation. When a camera randomly alerts you about wildlife indoors or reports visitors who aren’t there, it chips away at user trust, especially when these devices are marketed as security tools. Gemini for Home underscores both the potential of AI-enhanced smart homes and the risks when that technology falters.

- False alarms can cause unnecessary worry or lead users to dismiss legitimate alerts.

- Incorrect detections or phantom warnings may diminish confidence in genuine alerts, such as theft attempts.

- As Gemini becomes a core part of Google’s smart-home approach, these setbacks might hinder adoption and threaten credibility.

What’s next? Google must improve Gemini for Home by updating detection models, refining firmware in Nest devices, and communicating more clearly about its performance limits. Look for future updates that enhance alert accuracy, introduce new privacy options, and offer smarter notification management. Until then, treat Gemini’s alerts as helpful hints rather than absolute facts, and verify what your cameras are detecting.