Cloud-based AI chatbots like ChatGPT and Gemini are convenient, but they have drawbacks. Running a local LLM (the technology that powers these chatbots) gives you more control, offline access, and better data privacy. The technology may seem intimidating, but user-friendly apps make it accessible to anyone.

Ollama is an intuitive application that lets you run LLMs locally without deep technical knowledge. It can run capable AI models on ordinary hardware, such as a laptop. Ollama’s standout feature is its simplicity: no intricate setup is required.

It supports a range of models and runs on macOS, Windows, and Linux, so whatever your platform, Ollama has you covered. Installation is straightforward, and you can be up and running with LLMs in no time.

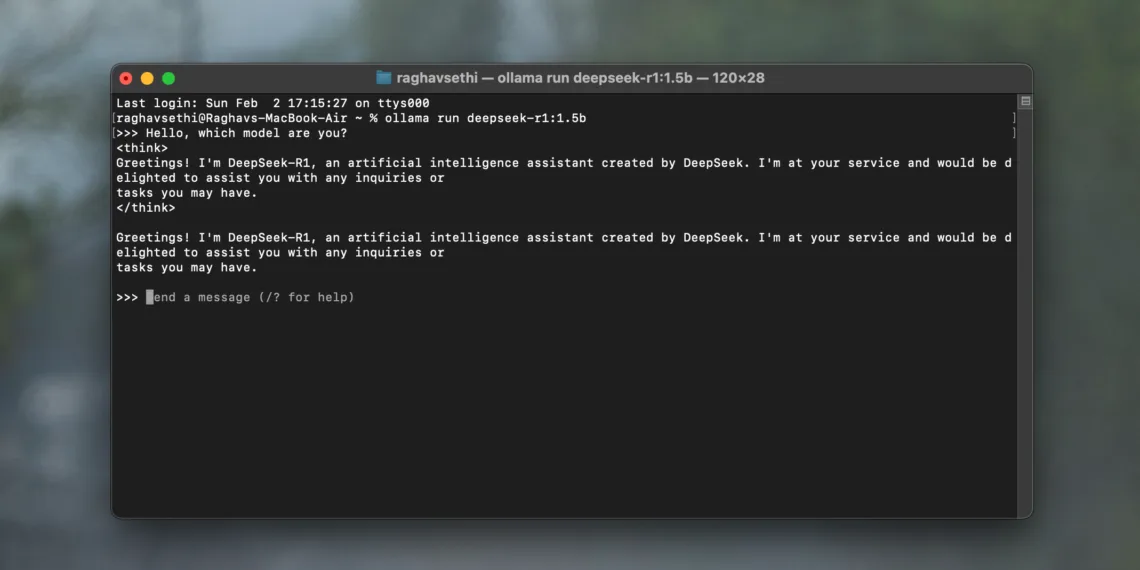

To launch a model, use the command `ollama run [model identifier]`, substituting any of the supported LLMs. For instance, to run Microsoft’s Phi 4 model, use:

```shell
ollama run phi4
```

For Llama 4, use:

```shell
ollama run llama4
```

The model downloads on first use and then starts, letting you chat with it directly from the command line. You can run other models, such as DeepSeek, on a laptop the same way.
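If you want to call a local model from a script instead of typing into the interactive session, you can wrap the same `ollama run` command. The Python sketch below is a minimal illustration, assuming the `ollama` CLI is on your PATH and the model has already been downloaded (for example with `ollama pull phi4`). It relies on `ollama run` accepting a one-shot prompt as an extra argument, in which case it prints the reply and exits. The helper names `build_ollama_command` and `ask` are hypothetical and not part of Ollama.

```python
import shutil
import subprocess
from typing import List, Optional


def build_ollama_command(model: str, prompt: Optional[str] = None) -> List[str]:
    """Return the argument list for `ollama run`, optionally with a one-shot prompt."""
    if not model:
        raise ValueError("a model identifier such as 'phi4' is required")
    cmd = ["ollama", "run", model]
    if prompt is not None:
        # With a prompt argument, `ollama run` answers once and exits
        # instead of opening an interactive chat session.
        cmd.append(prompt)
    return cmd


def ask(model: str, prompt: str) -> str:
    """Send one prompt to a local model via the Ollama CLI and return the reply."""
    if shutil.which("ollama") is None:
        raise RuntimeError("the `ollama` command was not found on PATH")
    result = subprocess.run(
        build_ollama_command(model, prompt),
        capture_output=True,  # collect stdout/stderr instead of printing them
        text=True,            # decode output as text
        check=True,           # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout.strip()
```

For example, `ask("phi4", "Explain what a local LLM is in one sentence.")` returns the model's reply as a string. Building the argument list separately keeps the command easy to inspect and avoids passing the prompt through a shell.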

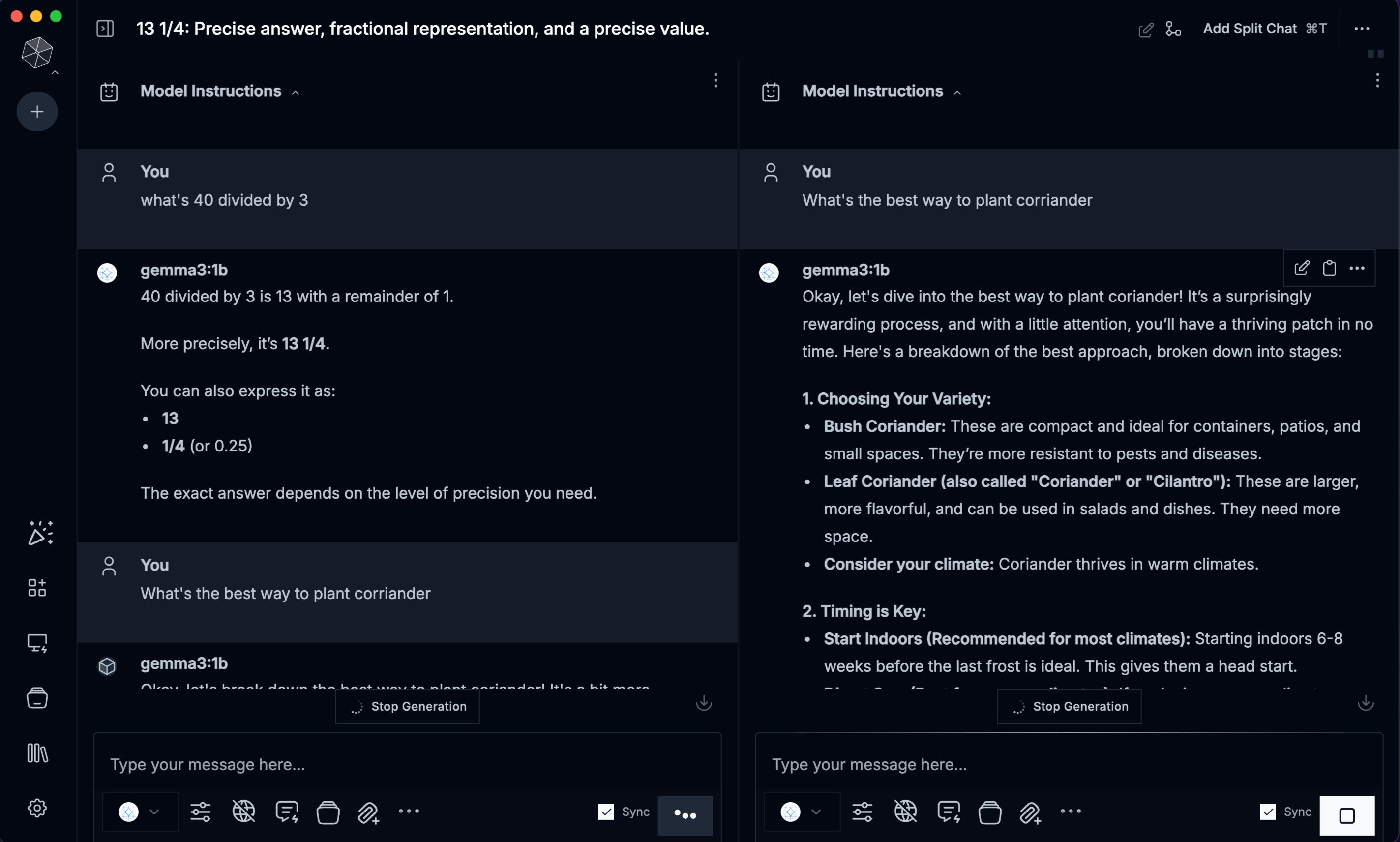

Like Ollama, Msty is a straightforward app that simplifies running local LLMs. Available for Windows, macOS, and Linux, it removes the usual complexity of local LLMs, such as Docker configuration or the command line.

Msty supports a variety of models, including popular choices like Llama, DeepSeek, Mistral, and Gemma. You can also search for models directly on Hugging Face, a popular hub for discovering new AI models. After installation, the app automatically downloads a default model to your device.

After that, you can download any model from the available library. If you’d rather avoid the command line entirely, Msty is the app for you; its intuitive interface makes for a smooth experience.

The app also includes a prompt library with several ready-made prompts to guide the model and refine its responses. It also offers workspaces to keep your chats and tasks organized.

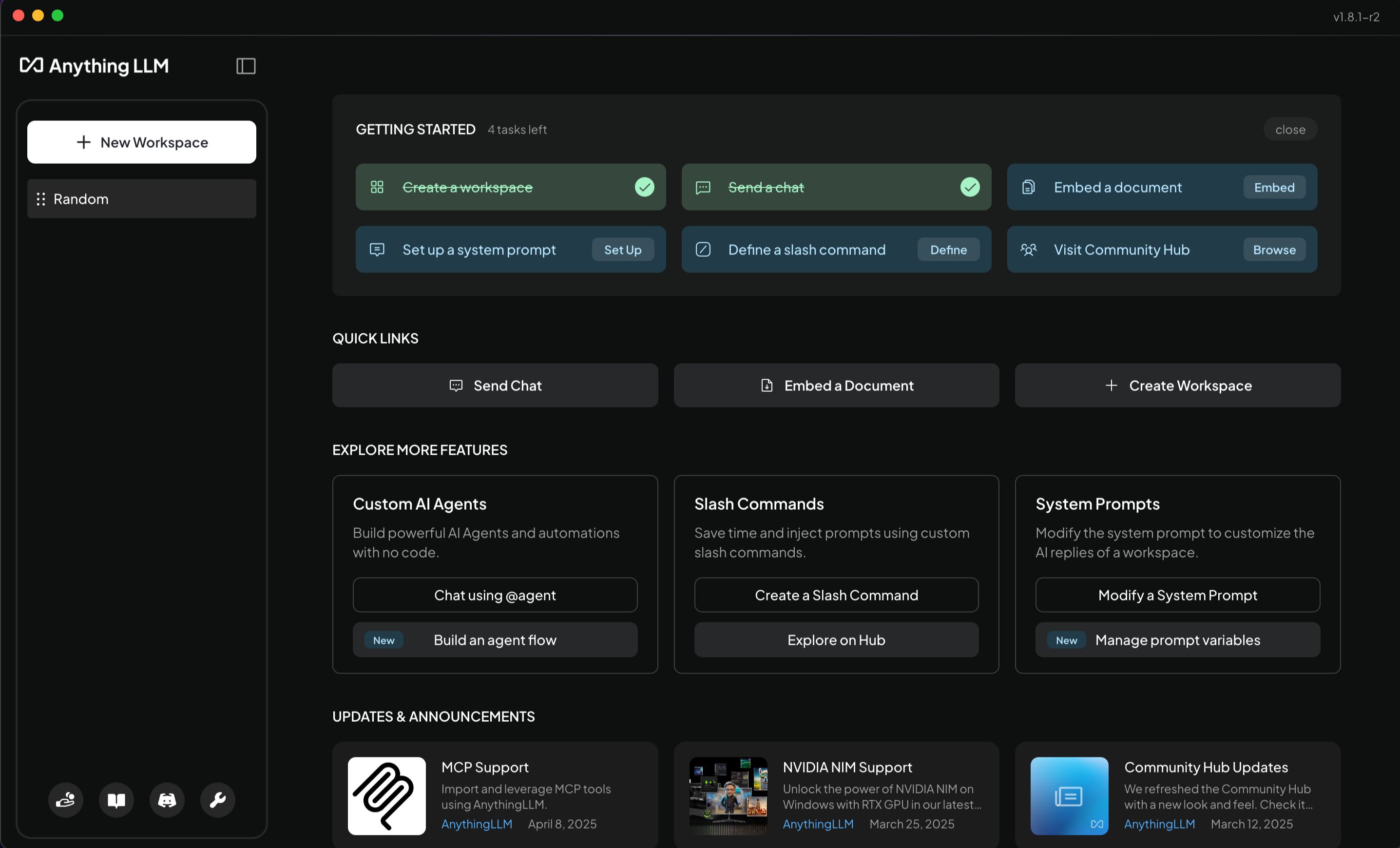

AnythingLLM is an excellent desktop app for anyone who wants to run LLMs locally without a complicated setup. From installation to your first prompt, the experience is seamless and intuitive, much like using a cloud-based chatbot.

During the initial setup, you can choose from various models. Some top offline LLMs available for download include DeepSeek R1, Llama 4, Microsoft Phi 4, Phi 4 Mini, and Mistral.

Like most apps on this list, AnythingLLM is fully open source. It has its own built-in LLM provider and also supports multiple third-party sources, including Ollama, LM Studio, and LocalAI, so you can download and run countless models available online.

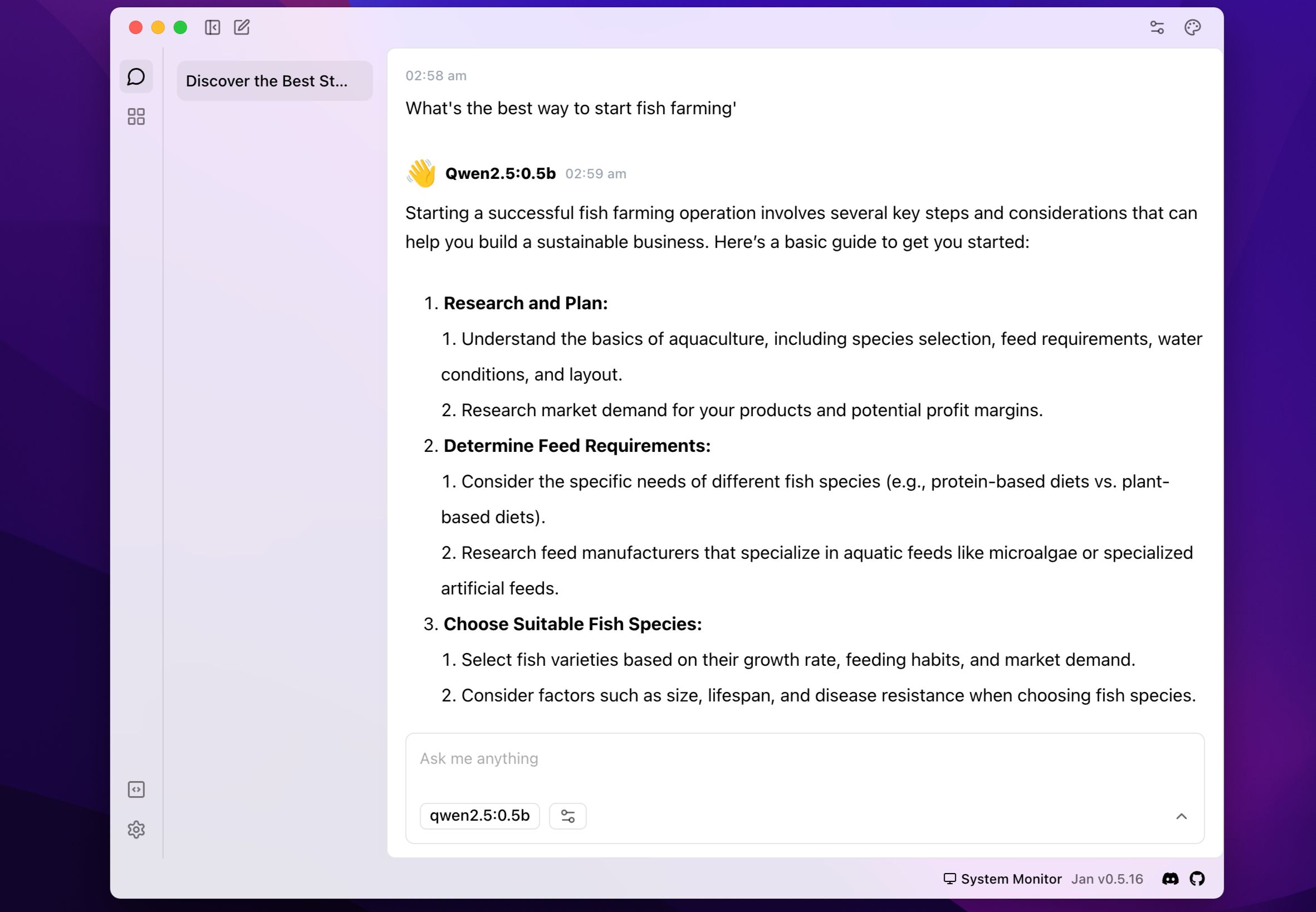

Jan positions itself as an open-source, offline alternative to ChatGPT. It provides a sleek desktop app for running various models locally. Getting started is simple: once you install the app (available for Windows, macOS, and Linux), you can choose from several models to download.

Only a few models are displayed by default, but you can search for others or enter a Hugging Face URL if you’re looking for something specific. You can also import a model file in GGUF format if you already have one on your device; it doesn’t get much simpler. The app also lists cloud-based LLMs, so use the appropriate filter if you want to hide them.
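Before importing a model file into Jan, it can help to confirm that it really is a GGUF file. The Python sketch below checks only the fixed-size header at the start of the file, assuming the layout described in the GGUF specification for version 2 and later: the 4-byte magic `GGUF`, then a little-endian uint32 version, a uint64 tensor count, and a uint64 metadata key/value count. The names `parse_gguf_header`, `is_gguf`, and `GGUFHeader` are hypothetical helpers for illustration, not part of Jan.

```python
import struct
from typing import NamedTuple

GGUF_MAGIC = b"GGUF"
HEADER_SIZE = 24  # 4-byte magic + uint32 version + two uint64 counts


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def parse_gguf_header(data: bytes) -> GGUFHeader:
    """Parse the fixed GGUF header from the first bytes of a file.

    Assumes the GGUF v2+ layout; v1 files used 32-bit counts and would be misread.
    """
    if data[:4] != GGUF_MAGIC:
        raise ValueError(f"not a GGUF file (magic bytes were {data[:4]!r})")
    if len(data) < HEADER_SIZE:
        raise ValueError("data is too short to hold a GGUF header")
    # "<IQQ": little-endian with no padding, i.e. uint32, uint64, uint64 (20 bytes).
    version, tensor_count, kv_count = struct.unpack_from("<IQQ", data, 4)
    return GGUFHeader(version, tensor_count, kv_count)


def is_gguf(path: str) -> bool:
    """Return True if the file at `path` starts with a readable GGUF header."""
    try:
        with open(path, "rb") as f:
            parse_gguf_header(f.read(HEADER_SIZE))
    except (OSError, ValueError):
        return False
    return True
```

For example, `is_gguf("model.gguf")` returns `True` only if the file begins with a well-formed header; it does not validate the tensors or metadata that follow.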

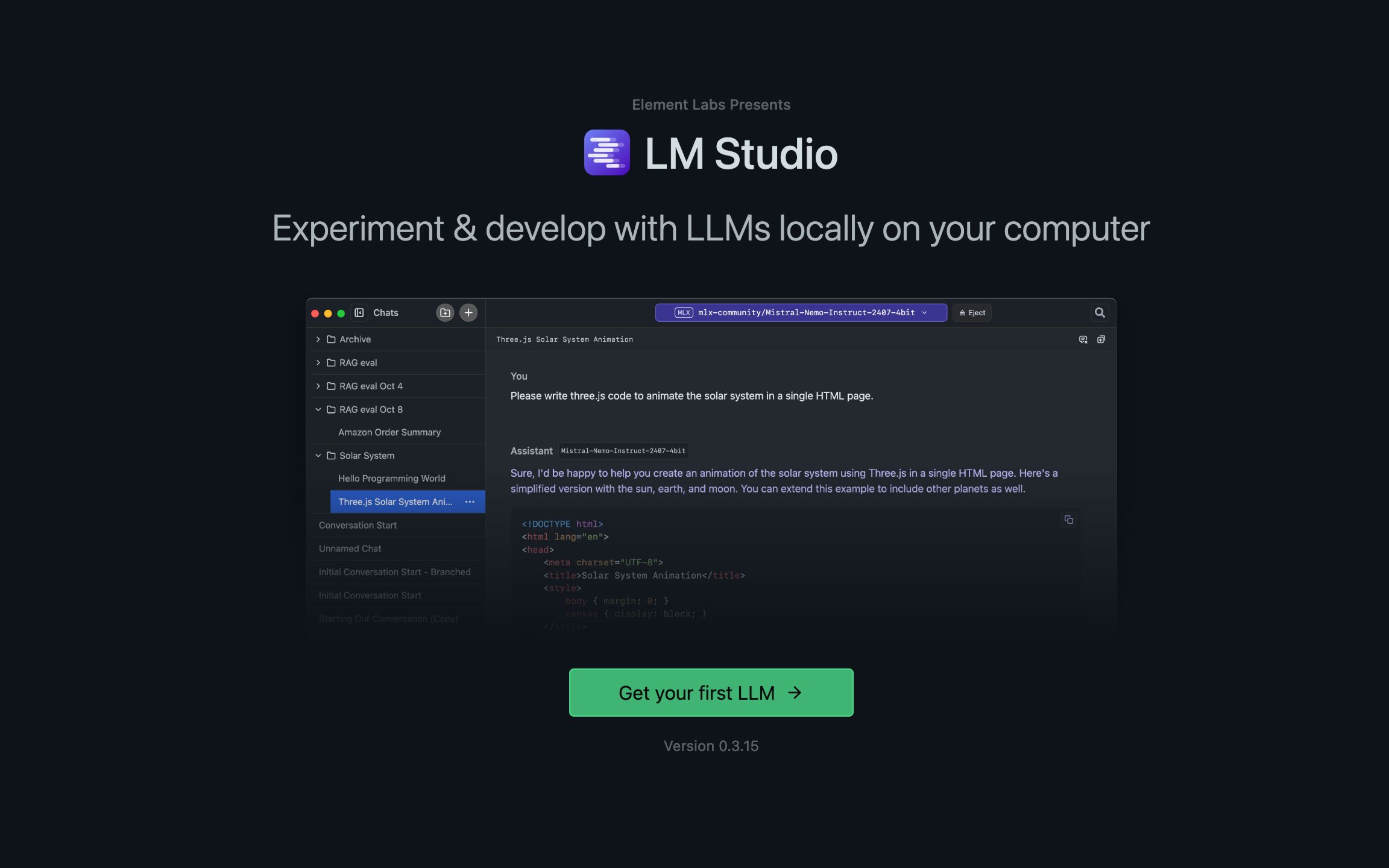

LM Studio offers another of the easiest ways to run LLMs on your own device. Its desktop app (available for macOS, Windows, and Linux) lets you run LLMs with little effort.

Once it’s set up, you can browse and load popular models like Llama, Mistral, Gemma, DeepSeek, Phi, and Qwen from Hugging Face in just a few clicks. Everything runs offline, so your prompts and conversations stay private on your device.

The app’s intuitive interface feels familiar, so anyone who has used a cloud-based chatbot like Claude should feel comfortable with it.

There are many ways to run an LLM locally, but the apps listed here offer some of the most convenient. Some involve a bit of command-line work, while others, like AnythingLLM and Jan, let you manage everything through a graphical user interface (GUI).

Depending on your comfort level with technology, try a few of these options and pick the one that best fits your needs.