The tool enables creators to connect with audiences worldwide by automatically dubbing and syncing videos across different languages.

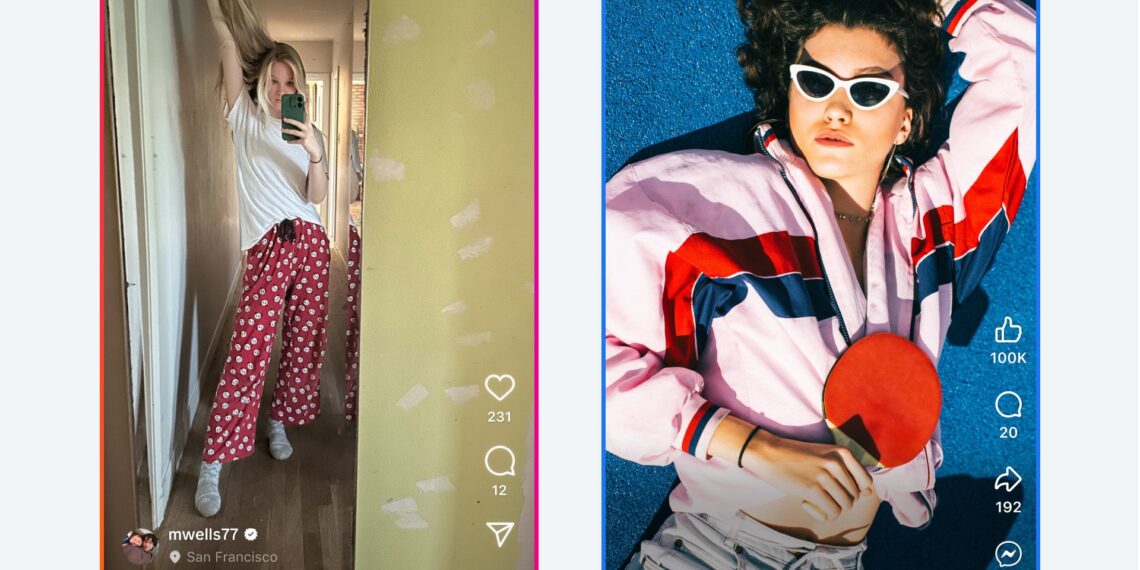

Meta, the parent company of Facebook and Instagram, has expanded its artificial intelligence features to enhance the accessibility of short-form videos across language barriers.

The company has introduced upgraded AI-driven translation and lip-syncing for Reels, its popular short-video format. The update adds support for Bengali, Tamil, Telugu, Marathi, and Kannada, alongside the previously supported English, Hindi, Portuguese, and Spanish.

According to a blog post, the new update employs neural machine translation models along with on-device and cloud-based AI systems to generate dubbed audio and real-time captions. These can be automatically suggested to viewers when the Reel’s language differs from their device settings. This technology does more than just add subtitles; it recreates the creator’s voice in the translated language and matches their mouth movements, giving the impression that the creator is speaking the new language.
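The suggestion rule described above (offer a translation when the Reel's language differs from the viewer's device language) can be sketched in a few lines of Python. This is only an illustration, not Meta's implementation: the function names, the use of ISO 639-1 codes, and the requirement that both languages be on the supported list are assumptions made for the example.

```python
# Languages named in the article, written as ISO 639-1 codes (an assumption;
# the article does not say how Meta identifies languages internally).
SUPPORTED_LANGUAGES = {"en", "hi", "pt", "es", "bn", "ta", "te", "mr", "kn"}


def primary_subtag(locale: str) -> str:
    """Return the lowercase language part of a locale such as 'pt-BR' or 'pt_BR'."""
    return locale.replace("_", "-").split("-")[0].lower()


def should_suggest_translation(reel_language: str, device_locale: str) -> bool:
    """Hypothetical rule: suggest a dub only when the languages differ
    and both appear in the supported set."""
    source = primary_subtag(reel_language)
    target = primary_subtag(device_locale)
    if source == target:
        return False  # viewer already understands the Reel's language
    return source in SUPPORTED_LANGUAGES and target in SUPPORTED_LANGUAGES
```

Under these assumptions, an English Reel shown on a device set to `bn-IN` would trigger a suggestion, while a Brazilian Portuguese Reel on a `pt_PT` device would not.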

Meta stated that the aim of this feature is to promote cross-cultural interaction, allowing users to discover content “regardless of where it was created or what language it was filmed in.” Early feedback from creators indicates that the expanded language options have already increased the reach of their videos.

The translation and lip-sync features are available free of charge to all public Instagram accounts in regions where Meta AI is active, as well as to Facebook creators who have at least 1,000 followers.

Meta said in its blog post, “Many creators want to reach audiences worldwide, and their input helped shape our translation features. Translations using Meta AI are just one way we’re delivering the best content and exploring innovative methods to connect people globally.”

However, some critics caution that AI translation struggles to convey context, emotion, and cultural nuance accurately. There is also a risk that an incorrect dub or translation misrepresents what a person actually said.