Meta announced on Monday that it will implement stricter measures against Facebook accounts that consistently share unoriginal content, including recycled text, images, and videos. This move is part of a broader initiative to uphold content integrity and support original creators on the platform.

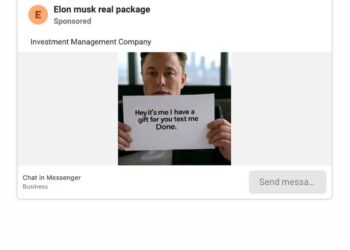

In a blog post, Meta said it has already removed around 10 million accounts this year for impersonating notable content creators. It has also taken action against about 500,000 profiles involved in spam tactics or fake engagement.

The corrective measures include decreasing the visibility of posts and comments as well as suspending access to Facebook’s monetization programs.

This update follows similar policy changes by YouTube, which recently clarified its approach to mass-produced and repetitive videos, particularly those driven by generative AI. Meta emphasized that users who transform existing content, for example by adding commentary, posting reactions, or taking part in trends, will not be negatively impacted.

Instead, enforcement efforts will target accounts that merely repost content, either through spam networks or by pretending to be the original creator.

Accounts that repeatedly violate these standards will face consequences, including losing the ability to monetize their content and reduced distribution of their posts across Facebook’s algorithm-driven feeds.

Meta is also testing a new feature that adds links to the original source on duplicate videos, so that original creators receive appropriate credit.

This update comes at a time when social media platforms are increasingly inundated with low-quality, AI-generated content. While Meta did not specifically mention “AI slop,” a term for bland or poorly made AI content, the new guidelines seem to indirectly address it.

The announcement follows mounting frustration among creators regarding Facebook’s automated enforcement systems. A petition signed by nearly 30,000 users has called for improved human oversight and clearer appeal processes, highlighting widespread issues with unjust account suspensions, according to TechCrunch.

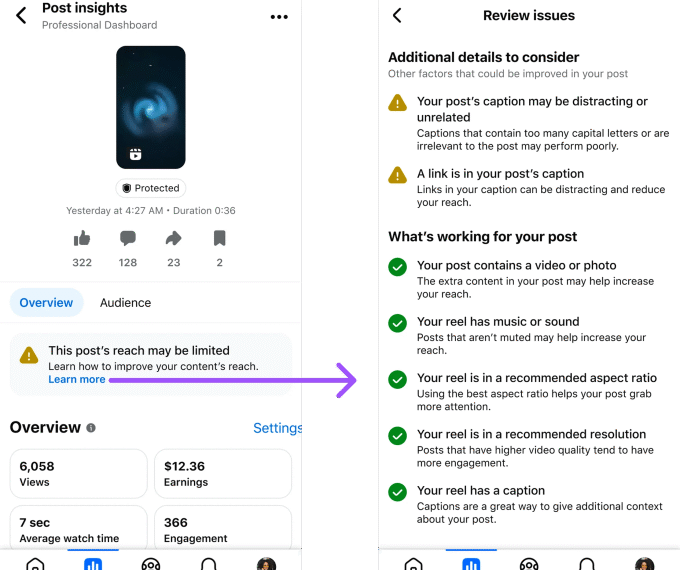

The new enforcement policies will be gradually implemented over the next few months, allowing creators time to adjust. Facebook’s Professional Dashboard now offers post-level insights to help users understand how their content is evaluated and assess any potential risks of demotion or monetization restrictions.

In its latest Transparency Report, Meta indicated that fake accounts make up about 3% of Facebook’s monthly active users globally, and that it took action against 1 billion such profiles in Q1 2025.

As Meta continues to refine its strategies, it is increasingly turning to community-based fact-checking in the US, employing a model akin to X’s Community Notes rather than relying solely on internal moderation teams.