
Yes, AI-generated frames add input latency. This has been well established, and prominent PC hardware YouTube channels tested it at least two years ago. But the debate about frame generation has drifted so far into tangents that it has lost sight of the core questions entirely.

For instance, actual users tend to weigh “enhanced visuals with a slight latency penalty” against “compromised settings with better latency.” That is a very different value proposition from simply comparing frame gen against equivalent native performance. What if we’ve been comparing frame generation to the wrong thing this whole time?

Reviewing Actual Statistical Data About Classic Frame Gen

Hardware Unboxed conducted extensive latency testing in late 2022, and the results cemented the default opinion about frame gen latency for the next few years. In Cyberpunk 2077, latency jumped from 47ms with DLSS 2 to 63ms with DLSS 3 frame generation enabled. You read that right. Latency rose by 16ms even though DLSS 3 delivered higher frame rates.

Similarly, in their F1 22 benchmarks, frame generation added 11ms of latency. They described it as getting a 170 FPS result while the actual gameplay has the latency and responsiveness of roughly 100 FPS. Still very playable, sure, but quantitatively significant.

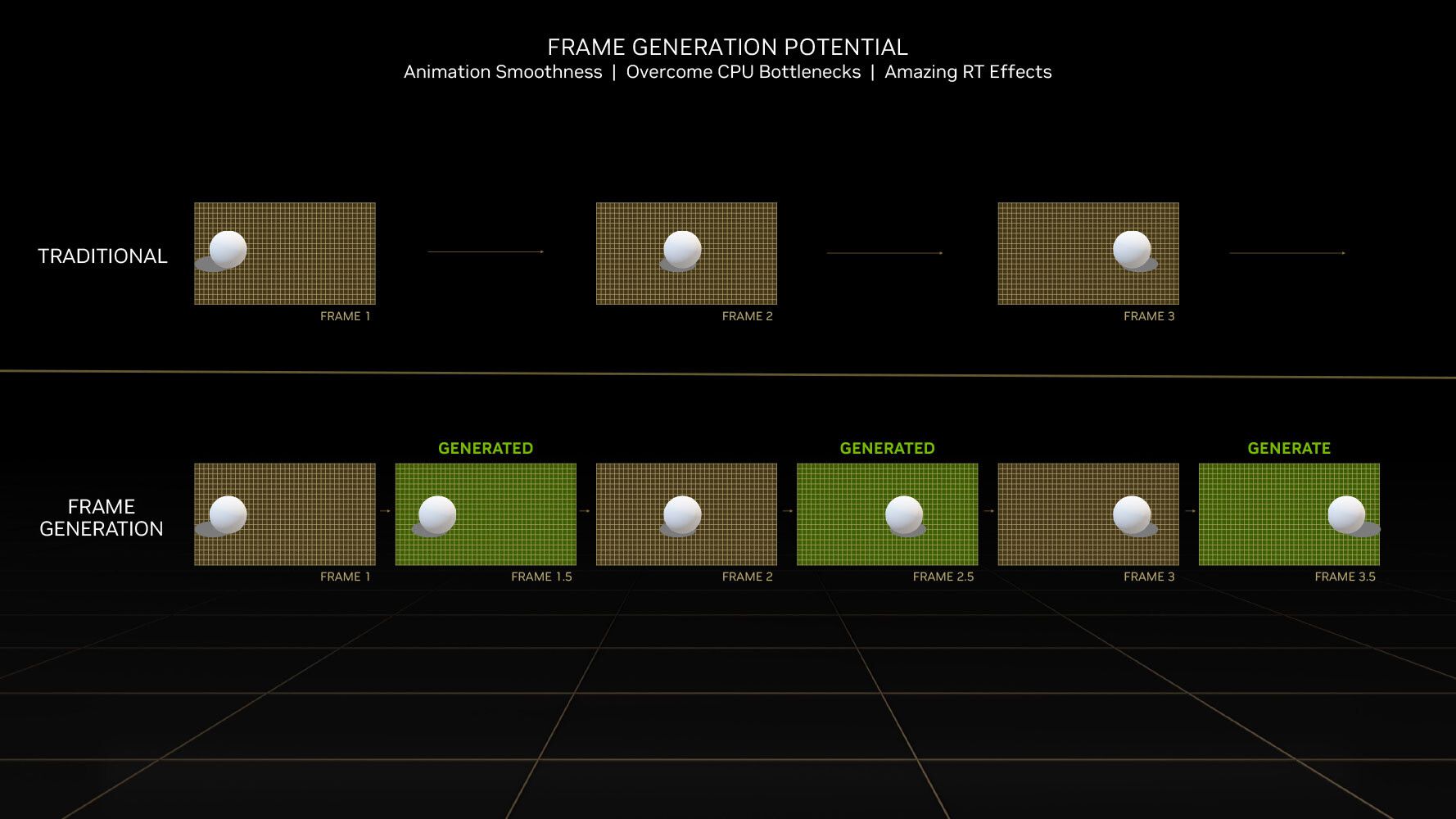

Digital Foundry reached similar conclusions around the same time. Their testing showed DLSS 3 adding a 10ms penalty, explaining that it “inherently adds input latency due to the fact that it holds a frame” to generate the interpolated frame between two real ones. This technical explanation made perfect sense: the GPU has to wait for the next real frame before it can create the AI-generated frame in between, and that wait is the inevitable delay.

Here’s how the measurements stacked up across different scenarios:

| Test Scenario | Without Frame Gen (latency @ FPS) | With Frame Gen (latency @ FPS) | Latency Penalty |
|---|---|---|---|
| Cyberpunk (HU) | 47ms @ 70FPS | 63ms @ 112FPS | +16ms |
| F1 22 (HU) | ~59ms @ 130FPS | ~70ms @ 170FPS | +11ms |
| Cyberpunk (DF) | 25ms | 35ms | +10ms |
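To see whether Digital Foundry’s “holds a frame” explanation lines up with the Hardware Unboxed rows in the table, here is a crude back-of-the-envelope model in Python. It assumes 2x frame generation (one generated frame per rendered frame) and treats the entire penalty as one rendered frame time. It ignores the cost of generating the frame, Reflex, and the display pipeline, so it is a sketch under those assumptions, not how either outlet calculated anything; the function names are made up for illustration.

```python
# Crude model: 2x frame generation shows one generated frame per rendered
# frame, and interpolation holds back one rendered frame before display.
# Assumption: the whole penalty equals one rendered frame time; generation
# cost, Reflex, and display/queue effects are ignored.

def rendered_fps(displayed_fps: float, multiplier: int = 2) -> float:
    """Frames the game actually renders per second with frame gen on."""
    return displayed_fps / multiplier


def frame_time_ms(fps: float) -> float:
    """Time per frame in milliseconds."""
    return 1000.0 / fps


def held_frame_penalty_ms(displayed_fps: float, multiplier: int = 2) -> float:
    """Estimated extra latency from holding back one rendered frame."""
    return frame_time_ms(rendered_fps(displayed_fps, multiplier))


# Displayed FPS with frame gen on, and the measured penalty, from the table.
measurements = {
    "Cyberpunk (HU)": (112, 16),
    "F1 22 (HU)": (170, 11),
}

for name, (fg_fps, measured_ms) in measurements.items():
    estimate = held_frame_penalty_ms(fg_fps)
    print(f"{name}: rendered {rendered_fps(fg_fps):.0f} FPS, "
          f"estimated +{estimate:.1f}ms vs measured +{measured_ms}ms")
```

For these two rows the estimates land within about 2ms of the measured penalties, which is consistent with the “holds a frame” explanation, though two data points prove little. The model also exposes the rendered rate: 112 displayed FPS means only 56 rendered FPS, below the 70 FPS measured without frame gen, because generating frames costs GPU time too.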

Both outlets concluded that frame generation fundamentally trades latency for visual smoothness. The results created “a weird situation where frame rate increases, but latency increases as well. Usually, when gaming, higher NATIVE frame rates lead to lower latency, and therefore a more responsive experience.”

The fact that both outlets tested similar games and arrived at the same conclusion established the baseline: frame generation = higher latency.

Is it Really an Issue of Latency?

That being said, the decision most people actually make when enabling frame generation rarely, if ever, comes down to practical levels of latency. When someone fires up Cyberpunk 2077 and wants to experience path tracing, they’re not choosing between “112 FPS with frame gen” and “112 FPS native.” They’re choosing between “playable performance with all the eye candy enabled” and “turning off the features that make the game look incredible.”

The general consensus is that native rendering is the default objective, and only when you can’t reach it should you start clamoring for frame generation, treating it as an “emergency” solution for when your GPU’s traditional rendering capability hits a wall.

For example, say you want to play at native 4K with full ray tracing. Some people might soldier on with ultra settings even at 30-40 FPS, with relatively good latency. The “better” option would be to slap on DLSS and frame generation to effectively double the FPS, even though the benchmarks show an increase in latency.

But in practice, the best thing to do is to just lower the settings or the resolution. Getting the best of both worlds, native 4K PLUS high frame rates (and thus caring about latency in this manner), is restricted to a very small group of top-end enthusiast PC users.

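This is where CPU bottlenecks also become particularly relevant. In CPU-limited games like Microsoft Flight Simulator, the upscaling half of DLSS cannot provide much benefit, because rendering fewer pixels does nothing for a CPU bottleneck, so the GPU-side latency argument doesn’t really apply anyway. Frame generation is the exception: generated frames are created on the GPU without waiting on the CPU, so it can still raise the displayed frame rate, but responsiveness stays tied to the CPU-limited base frame rate.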

Latency in the Dumb, Everyday Life of Mundane Gaming

Besides, latency perception varies dramatically depending on your input method, even across real-time action titles. Controller users on PC, for example, generally report less sensitivity to the latency penalty than mouse and keyboard users. When you’re playing something like Alan Wake 2 with a controller, that extra 10-15ms simply gets lost in the controller’s inherent latency and the game’s pacing. But fire up CS2 with a mouse, and you’ll feel every millisecond.

Furthermore, the settings context completely changes the equation. What you see constantly is people enabling frame generation specifically to keep all the eye candy that would otherwise be impossible if you forced the GPU to render at native resolution. They’re not trying to boost an already-decent frame rate. Instead, they’re trying to make an unplayable situation playable while keeping the visual features that make the game worth playing in the first place.

When Hogwarts Legacy launched with demanding ray tracing options, were people mostly focused on the “frame gen versus native” debate? No. At least not as much as they were asking for the settings and resolution tweaks that would compromise their visual experience the least.

Avatar: Frontiers of Pandora, built on Ubisoft’s heavy Snowdrop engine, pushes even high-end hardware to the limit. The game’s lush alien environments and complex lighting simply overwhelm traditional rendering. Starfield at launch became the poster child for unoptimized games, requiring upscaling just to maintain 60 FPS on flagship cards.

NVIDIA’s ACE for Games demo shows that today’s latency budget covers far more than rendered frames. ACE chains Riva speech recognition, a NeMo language model, and Audio2Face facial animation so an AI companion NPC can answer a player’s spoken question in real time. NVIDIA markets the pipeline as “low-latency on-device inference” for RTX 40 series graphics cards, but it hasn’t published exact round-trip figures. Measured or not, some of those milliseconds now belong to dialogue, not just graphics.

Commercial middleware faces the same trade-off. Inworld AI’s latest Voice 2.0 update reports roughly 200 ms to the first audio chunk when running its local text-to-speech pipeline, quick enough for fluid back-and-forth yet still a noticeable slice of a game’s total response window. Meanwhile, GPU features such as RTX Video Super Resolution borrow Tensor-core cycles; reviewers noted the added GPU load but have not yet measured input-latency changes. Whether it’s an uncensored AI chat experience, video enhancement, or frame interpolation, every millisecond spent on AI extras comes out of the same latency allowance that competitive players track so closely.

The latency question often gets thrown away entirely in favor of the narrative that developers are increasingly designing their games around these AI assistance technologies. But… that is a tantalizing subject for another day.

Can Adjustments Easily Overcome Latency?

How long you’ve been playing also matters. Many people adapt to frame generation latency remarkably quickly, as long as the visual payoff is significant enough to justify the trade. You might notice a slight sluggishness for the first few minutes, but when you’re getting smooth motion with path-traced lighting, your brain and muscle memory simply adjust.

There’s also the power efficiency angle that gets overlooked. It sounds pedantic when we’re specifically talking about latency, yes. But you see, frame generation often lets you run at lower GPU utilization while maintaining high frame rates. When you can hit your target performance with the GPU running at 70% instead of 100%, a 16ms penalty over an otherwise full single-player campaign becomes a reasonable trade.

In addition, while DLSS 3 adds latency compared to DLSS 2, it still often beats native rendering without Reflex, as Hardware Unboxed has confirmed multiple times, because enabling frame generation also switches on NVIDIA Reflex. The catch is that most reviewers tested with Reflex enabled across the board, while plenty of players don’t use Reflex consistently.

And have we discussed how game pace affects latency tolerance? For something fast and competitive, every millisecond matters. But in cinematic single-player games, which happen to be exactly the games that benefit most from ray tracing and frame generation, the latency penalty becomes much more acceptable. Well… except for those who simply cannot stand the quality of DLSS-generated frames.

We haven’t even touched on frame rate jumps, just relative improvements: how going from 30 FPS to 60 FPS still feels transformative despite the latency penalty, for one. But it feels like nitpicking at this point.

It Has Never Been a Static Goal Post

DLSS has evolved rapidly since its introduction. Remember how it absolutely sucked ass the first time on the RTX 20 series? Pepperidge Farm remembers. With the exception of DLSS-sensitive users, people who dismissed DLSS based on pre-DLSS 2 experiences often haven’t revisited it since. Then again, maybe making it unnoticeable was the point in the first place?

In any case, even before DLSS 4, DLSS 3.5 and newer implementations felt noticeably more responsive than the launch version, though the obvious problem is that Frame Generation itself is locked to RTX 40 series GPUs. Early DLSS 3 games had inconsistent frame pacing and obvious visual artifacts, but newer implementations are much more refined, at least from a general gameplay point of view. Cyberpunk’s path tracing update, Alan Wake 2, and Indiana Jones and the Great Circle represent a completely different class of frame generation implementation than what was available at launch.

There’s also the hardware evolution angle. As the technology improves, the goal of optimizing frame generation for lower-end cards is getting closer and closer, despite corporate resistance (in favor of offering it only on near-halo or flagship models). VRAM usage with DLSS, for instance, is considerably lower in later versions of the feature.

AMD’s competing solutions have also pushed Nvidia to improve its implementation. FSR 3 and AMD Fluid Motion Frames created competitive pressure that has benefited everyone, despite fundamental differences in design and implementation.

The most interesting development for our discussion, of course, has been DLSS 4 and Multi Frame Generation. This is where Nvidia finally claims latency improvements alongside the performance gains. In reality, adding more intermediate frames only mitigates the latency issue rather than solving it, but let’s at least give credit where it’s due.
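A simple way to see why stacking more generated frames can’t, by itself, cut latency is to separate the displayed frame rate from the rendered one. The Python sketch below assumes a fixed 60 FPS rendered rate for every multiplier, which real hardware won’t quite hold because frame generation has its own overhead; the function name is hypothetical.

```python
# Simple model of Multi Frame Generation: each rendered frame is followed by
# (multiplier - 1) generated frames. Assumption: the rendered (base) frame
# rate stays fixed as the multiplier changes; in practice overhead lowers it.

def mfg_frame_times(base_fps: float, multiplier: int) -> tuple[float, float, float]:
    """Return (displayed FPS, displayed frame time ms, rendered frame time ms)."""
    displayed_fps = base_fps * multiplier
    return displayed_fps, 1000.0 / displayed_fps, 1000.0 / base_fps


for multiplier in (1, 2, 3, 4):
    shown, shown_ms, rendered_ms = mfg_frame_times(60, multiplier)
    print(f"{multiplier}x: {shown:.0f} FPS on screen, "
          f"{shown_ms:.1f}ms per displayed frame, "
          f"rendered frames still every {rendered_ms:.1f}ms")
```

In this model, smoothness scales with the multiplier, but the rendered frames, the ones that actually reflect your inputs, still arrive every 16.7ms. That is why the extra frames mitigate rather than solve the latency issue.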

What You Should Actually Worry About

After watching this technology evolve and seeing how people actually use it, we’re going to parrot the same practical advice that has been given on this subject: enable frame generation for single-player action games.

These are exactly the games where you want to maintain high visual settings (especially ray tracing), and that would otherwise be unplayable at those settings. Also keep in mind that your base frame rate should already be 60 FPS or higher at these settings, and that you should preferably be using a controller.
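The rule of thumb above can be written down as a tiny decision helper. This is purely illustrative: `Scenario` and `frame_gen_advice` are hypothetical names, the 60 FPS threshold comes from the advice in this article rather than from any vendor guidance, and “base FPS” means the frame rate with frame generation turned off at your chosen settings.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical description of a game session (illustration only)."""
    single_player: bool
    base_fps: float        # frame rate with frame generation OFF, at your settings
    uses_controller: bool


def frame_gen_advice(s: Scenario, min_base_fps: float = 60.0) -> str:
    """Encode this article's rule of thumb; the threshold is an adjustable assumption."""
    if not s.single_player:
        # Fast, competitive games: every millisecond matters, so leave it off.
        return "leave it off"
    if s.base_fps < min_base_fps:
        # Below the suggested 60 FPS base: lower settings or resolution first.
        return "lower settings first"
    if not s.uses_controller:
        # Mouse and keyboard players tend to feel the added latency more.
        return "enable, but expect to notice the latency on mouse"
    return "enable"


print(frame_gen_advice(Scenario(single_player=True, base_fps=72, uses_controller=True)))  # prints "enable"
```

Keeping the threshold as a parameter reflects that 60 FPS is a guideline, not a hard limit; latency-tolerant players might lower it, and mouse users might raise it.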

But let me reiterate that the more important lesson here is how we evaluate the technology. Instead of internalizing benchmark differences that are only noticeable when your RivaTuner overlay is on the screen, maybe we should discuss trade-offs and use cases instead.

Because, honestly speaking, even if we go back to the example of “170 FPS being played as if it is 100 FPS,” the playability is still there. That 100 FPS is still more than playable for most users. We are not looking at some excruciating level of latency where it should be called “lag” instead of latency. The biggest irony is that almost all competitive games aren’t even that resource-intensive in the first place. Well, maybe Fortnite, but even that isn’t demanding compared to recent expansive action games. On anything from the RTX 30 series or RX 6000 series onward, those games’ frame rates shoot through the roof, and latency drops along with them, without frame generation AI ever entering the discussion.