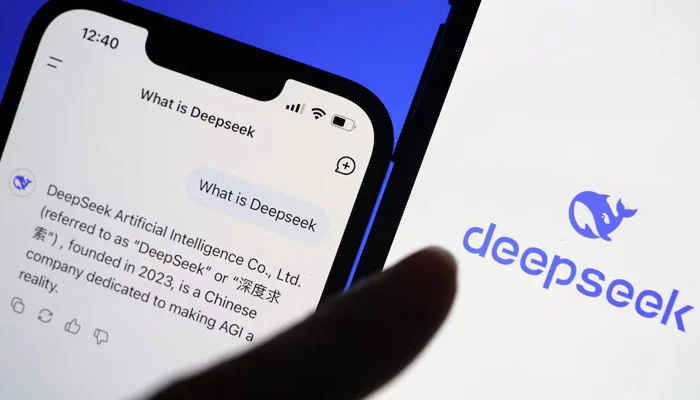

Chinese AI company DeepSeek has unveiled its latest "experimental" model, claiming it is more efficient to train and better at processing long text sequences than earlier versions of its large language models. The Hangzhou-based firm introduced DeepSeek-V3.2-Exp as an "intermediate step toward our next-generation architecture" in a post on the developer platform Hugging Face.

That next-generation architecture is likely to be DeepSeek's most significant release since the V3 and R1 models, which surprised Silicon Valley and international tech investors. V3.2-Exp introduces a mechanism called DeepSeek Sparse Attention, which the company says reduces computing costs and improves some aspects of model performance. DeepSeek also announced on X (formerly Twitter) that it is cutting its API prices by more than 50%.

While the next-generation architecture isn't expected to shake markets the way earlier releases did in January, it could still put pressure on key competitors such as Alibaba's Qwen and US-based OpenAI if DeepSeek repeats the success of R1 and V3. To do so, it would need to show high performance at a fraction of what rivals spend on training.