For many years, chess has been considered a benchmark for testing how advanced artificial intelligence can become when competing against human intelligence. The milestone came in 1997 when IBM’s Deep Blue supercomputer defeated the reigning world chess champion, Garry Kasparov. This event was seen as a significant turning point, with The Wall Street Journal describing it as “a substantial setback for mankind.”

Humans have not been shut out entirely, though. Just a month ago, Norwegian chess grandmaster Magnus Carlsen beat ChatGPT in a game without losing a single piece. AI chatbots are also competing among themselves. Earlier this month, ChatGPT, powered by OpenAI’s o3 reasoning model, defeated Grok, an AI chatbot created by Elon Musk’s xAI team, in a chess tournament.

But how does the average chess enthusiast stack up against AI bots? Many find it to be a frustrating experience. A common theme on chess forums is that bots tend to play “differently from humans.” When facing a powerful engine like Stockfish 16, which can evaluate over ten million positions every second, few players stand a real chance.

Some seasoned players argue that chess engines are actually easier to beat because they follow predictable patterns, and that learning to survive the early assault can lead to victory. Still, AI bots do not play the way people do: they rely on deep calculation and on moves that look unconventional to human eyes. A researcher at Carnegie Mellon University recently introduced a different approach: designing an AI chess bot that plays more like a human.

Introducing Allie

The brainchild of Yiming Zhang, a PhD student at Carnegie Mellon University’s Language Technologies Institute, Allie aims to mimic human-style play. Zhang was inspired by Netflix’s popular series, “The Queen’s Gambit,” which sparked his interest in chess. However, he quickly became frustrated with existing AI bots, which tend to play unnaturally and rely heavily on brute-force calculations to win.

What sets Allie apart is its training process. It has been taught using 91 million transcripts of real human chess games. This extensive dataset allows it to emulate the thought processes, attacking strategies, and defensive maneuvers typical of average human players. According to a research paper, Allie even “ponders” during critical moments, much like a human would.

Test Your Skills Against Allie

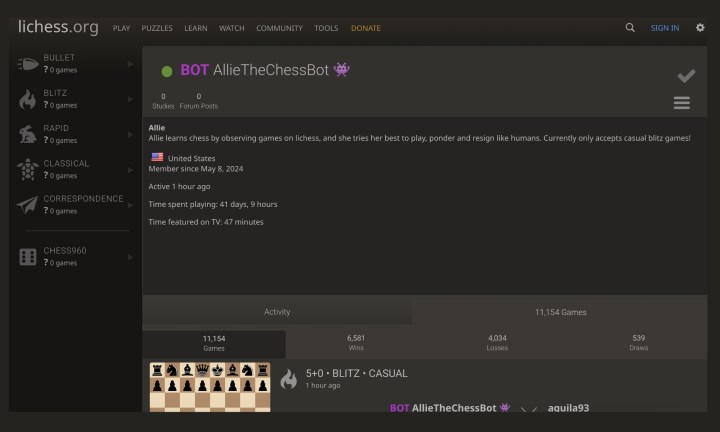

You can challenge Allie online through the platform Lichess, where it has already played over 11,500 games against human players. Out of these, it has secured more than 6,500 wins, lost just over 4,000, and about 500 games ended in a draw.
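As a rough reading of that record, the rounded figures can be turned into rates. The sketch below uses 6,500, 4,000, and 500 as stand-ins for the article's "more than", "just over", and "about" counts, so every result is an approximation. These stand-ins add up to 11,000, slightly short of the 11,500 games quoted. The function name `score_breakdown` is illustrative only.

```python
def score_breakdown(wins, losses, draws):
    """Return approximate result rates for a win/loss/draw record."""
    total = wins + losses + draws
    return {
        "games": total,
        "win_rate": wins / total,
        "loss_rate": losses / total,
        "draw_rate": draws / total,
        # Standard chess scoring: a win is 1 point, a draw is half a point.
        "score": (wins + 0.5 * draws) / total,
    }


# Rounded figures from the article (assumed stand-ins, not exact counts).
record = score_breakdown(wins=6500, losses=4000, draws=500)

for name, value in record.items():
    if name == "games":
        print(f"{name}: {value}")
    else:
        print(f"{name}: {value:.1%}")
```

With these rounded inputs, wins come to roughly 59 percent of the games counted, and the overall score, with a draw counted as half a point, to about 61 percent.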

Despite its human-like thinking, Allie remains a formidable opponent. It can hold its own against everyone from casual players to experienced grandmasters. During tests against top-level players with a rating of 2500 Elo, Allie demonstrated the strength of a grandmaster, all while learning solely from human gameplay.
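For context on what a 2500 rating implies, the standard Elo expected-score formula relates a rating gap to an expected result. The sketch below is the textbook formula, not something taken from the Allie research. The 2100 rating is a made-up example opponent, and `expected_score` is an illustrative name.

```python
def expected_score(rating_a, rating_b):
    """Expected score (between 0 and 1) for player A against player B under Elo."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


# Two equally rated players are each expected to score half the points.
even_match = expected_score(2500, 2500)

# Hypothetical example: a 2500-rated player against a 2100-rated player.
strong_vs_club = expected_score(2500, 2100)

print(f"2500 vs 2500: {even_match:.2f}")
print(f"2500 vs 2100: {strong_vs_club:.2f}")
```

A 400-point gap corresponds to an expected score of about 0.91 for the stronger player, which gives a sense of how far a 2500 rating sits above a typical club player.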

Since its public debut, Allie has gained popularity, and it is free to play. It currently accepts challenges only for quick “Blitz” games. Those interested in how a human-like AI makes its decisions can also watch it compete against other players on Lichess. The project’s code is openly available on GitHub, allowing researchers and enthusiasts to build on it.

With Allie, Zhang set out to close the gap between engines whose tactics humans find hard to follow and the way people actually play. An AI that thinks and moves more like a human player might reshape how we interact with chess engines in the future.