Xiaomi recently released and open-sourced its latest foundation language model, MiMo-V2-Flash. Built for efficiency and fast inference, the model performs particularly well in reasoning, code generation, and intelligent agent applications. It also serves as a general-purpose AI assistant for everyday tasks.

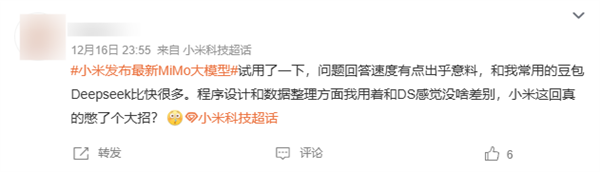

Following the launch, many users began testing MiMo-V2-Flash, and early feedback highlighted its response speed. Several users reported that it responded faster than other prominent models such as Doubao, DeepSeek, and Yuanbao, a result that exceeded some expectations.

In terms of scale, MiMo-V2-Flash has 309 billion total parameters, of which 15 billion are activated during inference. Its results on public benchmarks place it among the top-tier open-source large models currently available.

All model weights and inference code for MiMo-V2-Flash have been fully open-sourced under the MIT license, allowing developers and researchers broad access to explore and utilize the technology.

API pricing is set at $0.10 per million input tokens and $0.30 per million output tokens, with a limited-time free trial available for users who want to test the model.
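To make the quoted rates concrete, here is a minimal Python sketch that estimates the cost of a workload from its token counts. The function name `estimate_cost` and the example token counts are illustrative assumptions, not part of Xiaomi's API; only the two per-million-token prices come from the announcement.

```python
# Prices quoted in the announcement, in US dollars per million tokens.
INPUT_PRICE_PER_MILLION = 0.10
OUTPUT_PRICE_PER_MILLION = 0.30


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated API cost in US dollars (hypothetical helper)."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


if __name__ == "__main__":
    # Illustrative workload: 2 million input tokens, 500,000 output tokens.
    cost = estimate_cost(2_000_000, 500_000)
    print(f"Estimated cost: ${cost:.2f}")
```

For this illustrative workload, the input side costs $0.20 and the output side $0.15, for a total of about $0.35.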

Alongside the technical release, Xiaomi is hosting an ecosystem partner conference today, where it is expected to share more details on MiMo-V2-Flash’s technology and integration plans.

The release underscores Xiaomi’s commitment to advancing AI technology and to delivering faster, more efficient models for a range of applications.