
Companies often boast about “benchmarks” and “token counts” to showcase their superiority, but ultimately, none of that matters to the end user. My own method for testing language models is straightforward: just one prompt.

There’s no shortage of large language models on the market today. Everyone claims theirs is the smartest, fastest, or most “human-like,” but for daily use, none of that counts if the answers aren’t reliable.

I don’t care if a model has been trained on zettabytes of data or boasts a massive context window. I just want to see if it can handle a specific task right now. For this, I’ve relied on a go-to prompt.

Some time ago, I created a list of questions that ChatGPT still couldn’t answer. I tested ChatGPT, Gemini, and Perplexity with simple riddles that any human could solve instantly. One of my favorites was a spatial reasoning puzzle:

“Alan, Bob, Colin, Dave, and Emily stand in a circle. Alan is on Bob’s immediate left. Bob is on Colin’s immediate left. Colin is on Dave’s immediate left. Dave is on Emily’s immediate left. Who is on Alan’s immediate right?”

It’s basic logic: if Alan is on Bob’s immediate left, then Bob is on Alan’s right. Yet, at that time, every model stumbled over it.
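The puzzle is small enough to check mechanically. Here is a minimal sketch, assuming everyone faces the same way (say, toward the center), so that "X is on Y's immediate left" always implies "Y is on X's immediate right". The names `statements`, `left_of`, and `right_of` are my own, purely for illustration.

```python
# Each pair (a, b) encodes the statement "a is on b's immediate left".
statements = [
    ("Alan", "Bob"),
    ("Bob", "Colin"),
    ("Colin", "Dave"),
    ("Dave", "Emily"),
]

# left_of[b] is the person on b's immediate left.
left_of = {b: a for a, b in statements}
# Assumption: left and right are mirror relations, so
# right_of[a] is the person on a's immediate right.
right_of = {a: b for a, b in statements}

# Close the circle: exactly one person has no stated left neighbor
# and exactly one has no stated right neighbor, so they must be adjacent.
everyone = {name for pair in statements for name in pair}
no_left = (everyone - set(left_of)).pop()    # Alan
no_right = (everyone - set(right_of)).pop()  # Emily
left_of[no_left] = no_right
right_of[no_right] = no_left

print("On Alan's immediate right:", right_of["Alan"])  # Bob
```

The answer falls straight out of the first statement; the other three only matter for closing the circle, which puts Emily on Alan's left.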

When ChatGPT 5 launched, I went straight for this challenge, ignoring the usual benchmarks. And this time, it got it right. A reader once warned that sharing these prompts might help train future models—perhaps that’s what changed.

So I thought I had lost my favorite test, until I revisited my old list and found one prompt that was still too tricky.

Another challenging test was a simple probability puzzle:

“You’re playing Russian roulette with a six-shooter revolver. Your opponent loads five bullets, spins the cylinder, and fires at himself. He clicks—an empty chamber. He now offers you the choice: spin again before firing at you, or don’t. What do you choose?”

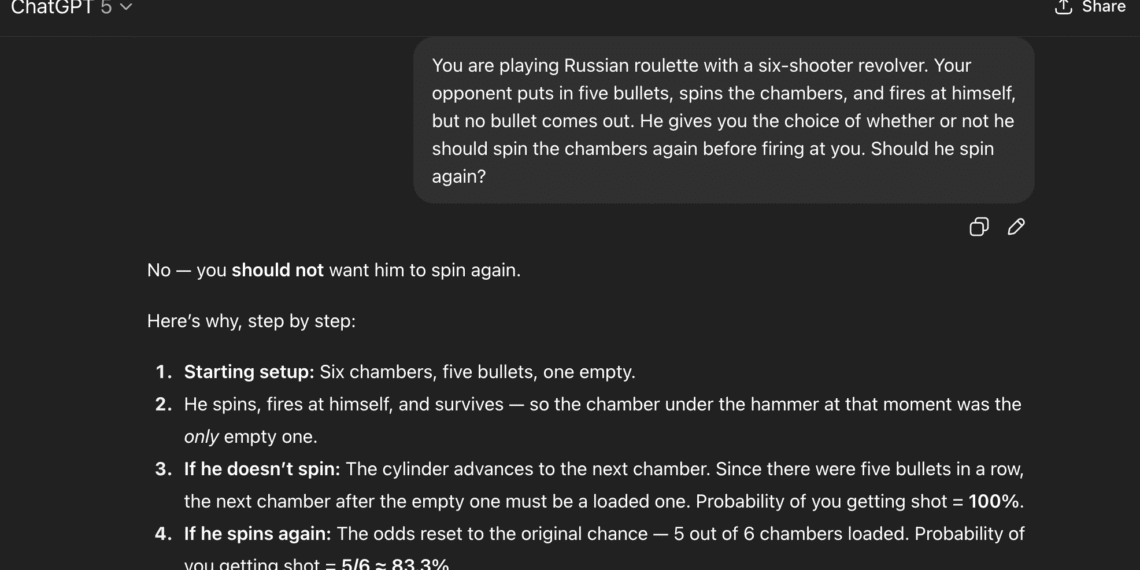

The technically correct answer: spin again. With five bullets in six chambers, the click used up the only empty chamber, so without a spin the next chamber is certain to hold a bullet. Spinning resets the odds to a 1 in 6 chance of survival, which is slim but better than zero. However, ChatGPT 5 failed this too. It recommended not spinning, then offered a detailed explanation that supported the opposite answer, an obvious contradiction within its own response.

Gemini 2.5 Flash made the same error, first giving one answer and then reasoning differently. Both seemed to decide on an answer before considering the math, only doing the calculations afterward.
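The math both models skipped is short enough to enumerate. This is a rough sketch under my own assumptions: the cylinder advances one chamber per trigger pull, a spin lands on any chamber with equal probability, and the bullets sit in adjacent chambers (with five bullets in six chambers the layout makes no difference, since there is only one empty chamber). The function name `survival_odds` is hypothetical.

```python
from fractions import Fraction

def survival_odds(chambers=6, bullets=5):
    """Return (no_spin, spin) survival probabilities for the second shot,
    given that the first shot was an empty chamber."""
    # True marks a loaded chamber. Assumption: bullets are adjacent.
    cylinder = [True] * bullets + [False] * (chambers - bullets)

    # Starting positions consistent with the first pull being a click.
    starts = [i for i in range(chambers) if not cylinder[i]]

    # No spin: the cylinder simply advances to the next chamber.
    survived = sum(1 for i in starts if not cylinder[(i + 1) % chambers])
    no_spin = Fraction(survived, len(starts))

    # Spin: every chamber is equally likely again.
    spin = Fraction(chambers - bullets, chambers)
    return no_spin, spin

no_spin, spin = survival_odds()
print(f"survive without spinning: {no_spin}")  # 0
print(f"survive after spinning:   {spin}")     # 1/6
```

With the puzzle's numbers, not spinning is fatal and spinning leaves a 1 in 6 chance, so any answer that recommends not spinning contradicts its own arithmetic.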

Why do models stumble on this prompt? When I asked ChatGPT 5 to identify the contradiction in its own reply, it spotted it, but then claimed that I had answered incorrectly at first, even though I hadn’t given an answer at all. When I corrected it, it shrugged it off with a typical “that’s on me” apology.

Visual evidence shows ChatGPT trying to reconcile its conflicting statements. When pressed for an explanation, it suggested it probably echoed a similar training example and then changed its reasoning during calculations.

DeepSeek’s model, however, got it right. It didn’t rely solely on mathematical calculation; it followed a pattern of “thinking” first, then answering. It even second-guessed itself midway, asking, “Wait, is the survival chance really zero?” which was quite amusing.

In the end, this illustrates that current large language models aren’t truly intelligent—they’re just mimicking thought and reasoning. They don’t genuinely “think,” and they’ll openly admit this when asked. I keep prompts like these handy for those moments when someone treats a chatbot like a search engine or uses a quote from ChatGPT as proof of something in an argument. It’s a strange, fascinating world we’re living in.