Shopping online is so simple. Sometimes, it feels too simple. You find a product, skim through the reviews, and suddenly, you’re placing an order. But not every glowing review you come across is written by a real person. Some are generated by AI, and distinguishing them isn’t always easy. Here’s what I watch out for.

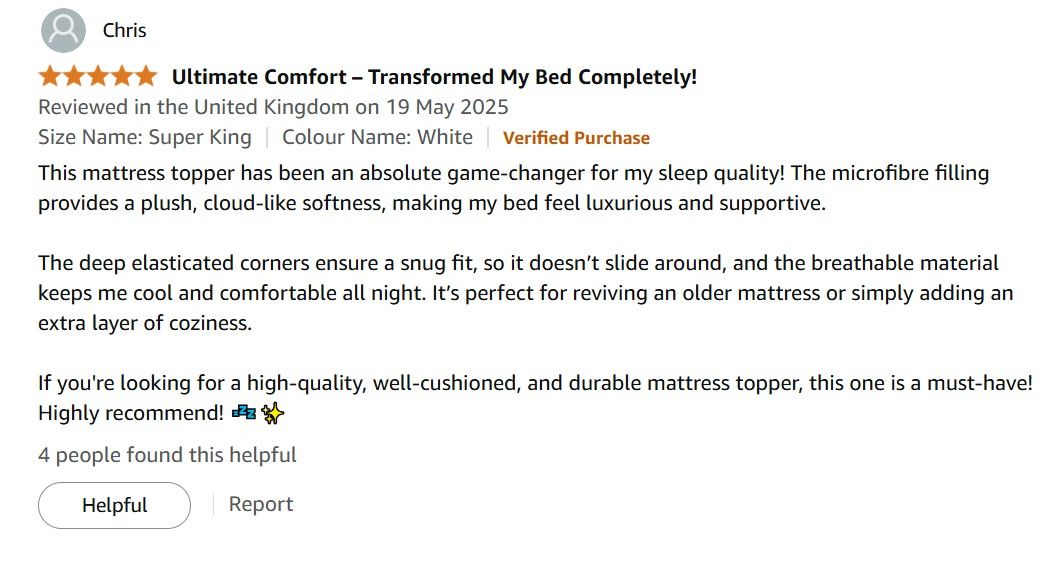

One of the simplest clues is in the writing style. AI-produced reviews tend to sound overly perfect, as if they’ve been carefully edited by grammar experts—each sentence flawless, punctuation impeccable, no typos in sight. That’s not really how most people write quick reviews.

When I leave a review myself, I usually do it on my phone, and my writing reflects that: I use slang, skip minor words, or just say something like, “luv it!” or “meh, not bad.” If I’m being a bit more formal, it might look like, “Does the job. A little noisy, but it cleans quickly. Worth the price.” Not everyone’s reviews are this casual, but that variation connects to the next point.

AI reviews are often vague and generic. They’ll include statements like, “The craftsmanship exceeded my expectations at an unbeatable price,” or use tired phrases like “…total game-changer,” which tell me next to nothing and could describe anything from a frying pan to a sofa. Sometimes, they copy the product description word for word.

In contrast, genuine buyers usually give more specific, experience-based feedback. For example, “The front pocket fits my notebook, but I wish there was a side pouch for my water bottle,” or comment on the color like, “The navy blue looks darker in person.” They might mention quirks or inconveniences only someone who’s actually used the product would notice.

Another red flag is repetitive phrasing across multiple reviews. AI-generated comments often rely on the same stock phrases. If I see identical sentences or sentence structures in reviews under different names, my suspicion increases. It’s common for spammers to reuse the same copy-and-paste blurb across various accounts.

If multiple reviewers all claim, in the same words, that a blender is “perfect for making smoothies for two,” I get skeptical; chances are, none of them actually tested it that way.
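If you have the review text copied out somewhere, the same check can be roughed out in a few lines of Python. This is only a sketch of the idea, not a real detector: the function name `shared_phrases`, the five-word phrase length, and the sample reviews are all my own assumptions for illustration.

```python
import re
from collections import defaultdict

def shared_phrases(reviews, n=5, min_reviewers=2):
    """Return n-word phrases used by at least `min_reviewers` different reviewers.

    `reviews` is a list of (reviewer_name, review_text) pairs.
    """
    seen = defaultdict(set)  # phrase -> names of reviewers who used it
    for reviewer, text in reviews:
        # Lowercase and strip punctuation so small formatting changes don't hide a match.
        words = re.findall(r"[a-z0-9']+", text.lower())
        for i in range(len(words) - n + 1):
            seen[" ".join(words[i:i + n])].add(reviewer)
    return {
        phrase: sorted(names)
        for phrase, names in seen.items()
        if len(names) >= min_reviewers
    }

# Hypothetical sample data, made up for this example.
sample_reviews = [
    ("anna", "Perfect for making smoothies for two. Love it!"),
    ("ben", "This blender is perfect for making smoothies for two."),
    ("cara", "A little noisy, but it cleans quickly. Worth the price."),
]

for phrase, names in shared_phrases(sample_reviews).items():
    print(f"{phrase!r} used by {', '.join(names)}")
```

A shared phrase on its own proves nothing, since real buyers also echo the product description. It is the same long phrase turning up under several names that makes me look closer.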

Review timing is also telling. If a product has been listed for months but suddenly receives 20 five-star reviews in a single week, I get suspicious. It’s easy for sellers to flood their pages with fake positive feedback, especially before a sale or to drown out earlier negative reviews. Most platforms let you sort reviews by date, making it simple to spot these bursts.
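The burst check is also easy to sketch in Python if you have jotted down review dates and star ratings. Everything here is an assumption for illustration: the function name `busiest_window`, the seven-day window, and the sample dates are made up, and what counts as "too many" depends on how popular the product is.

```python
from datetime import date, timedelta

def busiest_window(reviews, window_days=7):
    """Find the span of `window_days` days with the most five-star reviews.

    `reviews` is a list of (review_date, stars) pairs.
    Returns (date of the first review in that span, number of reviews in it).
    """
    dates = sorted(d for d, stars in reviews if stars == 5)
    best_start, best_count = None, 0
    left = 0
    for right, current in enumerate(dates):
        # Slide the left edge forward until the span is shorter than the window.
        while (current - dates[left]).days >= window_days:
            left += 1
        count = right - left + 1
        if count > best_count:
            best_start, best_count = dates[left], count
    return best_start, best_count

# Hypothetical data: a quiet few months, then 20 five-star reviews in one week.
sample = [(date(2024, 1, 10), 5), (date(2024, 2, 3), 4), (date(2024, 3, 15), 5)]
sample += [(date(2024, 6, 1) + timedelta(days=i % 7), 5) for i in range(20)]

start, count = busiest_window(sample)
print(f"Busiest week starts {start} with {count} five-star reviews")
```

If the busiest week holds most of a product's five-star reviews, that is the cluster I would read first.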

Photos can be revealing too. Many AI-generated reviews come with stock-looking images or pictures that don’t quite match the product. A quick reverse image search can show if the photo appears elsewhere online, indicating it’s not from a real customer. Authentic images tend to be slightly messy or taken with a phone, showing the product in a real setting, like on a kitchen counter or in someone’s hand.

For those who want extra confirmation, browser extensions like BitMind can help detect AI-generated images. Hovering over a product image shows a probability score for whether it is “Real” or AI-generated, which helps you spot fakes.

These tools aren’t perfect: they can sometimes flag genuine photos or miss suspicious ones. Still, they add a useful extra check. I don’t rely solely on them, but I use them alongside my own instincts.

No single sign definitively uncovers a fake review, but when several of these warning signs appear together, I take a step back before making a purchase.