A recent report from the nonprofit AI research organization Epoch AI suggests that AI companies may soon struggle to extract significant performance gains from reasoning models. The analysis warns that progress in these models could slow within the next year.

The report, which draws on publicly available data and a number of assumptions, points to limits on computing resources and rising research costs as the main constraints. The AI industry has leaned on reasoning models to improve benchmark performance, and that dependence is now under scrutiny.

According to Josh You, an analyst at Epoch, reasoning models rose to prominence because they perform exceptionally well on specific tasks. OpenAI’s o3 model, for example, has driven substantial gains in mathematical and programming performance in recent months. However, these models apply more computation to complex tasks, so they take longer to respond than conventional models.

Training a reasoning model typically begins with training a conventional model on a large dataset, followed by reinforcement learning. The reinforcement learning stage gives the model “feedback” on its solutions to difficult problems, which helps it improve them. While this approach has enabled rapid advances in AI, it has also exposed potential bottlenecks.

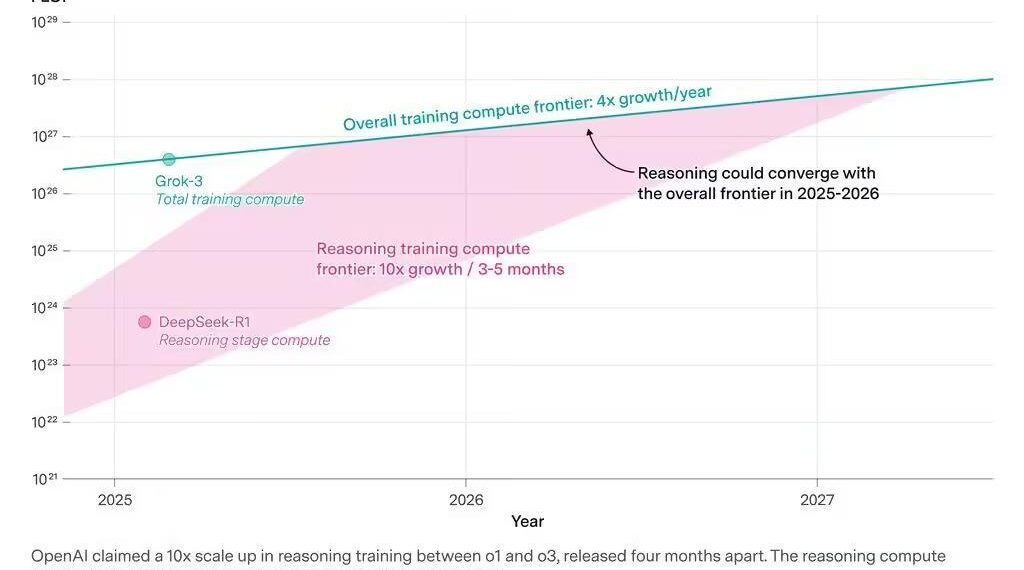

Leading AI laboratories, including OpenAI, are investing heavily in reinforcement learning. OpenAI reports that training its o3 model required around ten times the computing resources of its predecessor, o1, most of which went to the reinforcement learning phase. OpenAI researcher Dan Roberts noted that the company’s future plans prioritize reinforcement learning and will devote even more computing power to it than to initial model training.

While this strategy accelerates model improvements, Epoch’s analysis serves as a reminder that these advancements are not limitless. Increasing computational resources may soon hit physical and economic constraints.

Josh You elaborated on the difference in growth rates. Gains from standard AI model training are currently doubling annually, while gains from reinforcement learning are growing tenfold every three to five months. However, this faster growth will probably converge with the pace of the overall AI frontier by 2026.
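
To put the two growth rates on a common footing, the short Python sketch below converts "tenfold every three to five months" into an annual factor and estimates how quickly a faster-growing quantity catches up with a slower-growing one. It is an illustration only, not Epoch's method: the function names are hypothetical, and the starting gap of 100x is an assumed value that does not come from the report.

```python
import math


def annualized_factor(factor: float, period_months: float) -> float:
    """Convert 'grows by `factor` every `period_months` months' into a 12-month factor."""
    if factor <= 0 or period_months <= 0:
        raise ValueError("factor and period_months must be positive")
    return factor ** (12.0 / period_months)


def months_to_converge(gap: float, fast_annual: float, slow_annual: float) -> float:
    """Months until a fast-growing quantity catches a slow-growing one
    that starts out `gap` times larger."""
    if gap < 1:
        raise ValueError("gap must be at least 1")
    if fast_annual <= slow_annual:
        raise ValueError("fast_annual must exceed slow_annual")
    # Solve (fast_annual / slow_annual) ** (t / 12) == gap for t.
    return 12.0 * math.log(gap) / math.log(fast_annual / slow_annual)


if __name__ == "__main__":
    standard = annualized_factor(2, 12)  # "doubling annually", as stated in the article
    assumed_gap = 100.0                  # hypothetical starting gap, not from the report
    for period in (3, 5):                # "tenfold every three to five months"
        rl = annualized_factor(10, period)
        months = months_to_converge(assumed_gap, rl, standard)
        print(f"10x every {period} months = {rl:,.0f}x per year; "
              f"closes a {assumed_gap:.0f}x gap in {months:.1f} months")
```

Tenfold growth every three months compounds to 10,000x per year, and tenfold every five months to roughly 251x per year. Against annual doubling, even a large assumed gap closes within months, which is consistent with the article's point that the faster rate cannot be sustained for long.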

He emphasized that scaling reasoning models faces challenges beyond computing power, including high research costs. “If research requires sustained high expenses, reasoning models may struggle to achieve their anticipated scale,” You concluded.