DeepSeek, a prominent name in the open-source AI community, has released its latest large language model, DeepSeek-V3.2, along with an enhanced variant, DeepSeek-V3.2-Speciale. The official web platform, mobile app, and API have all been updated to the new version.

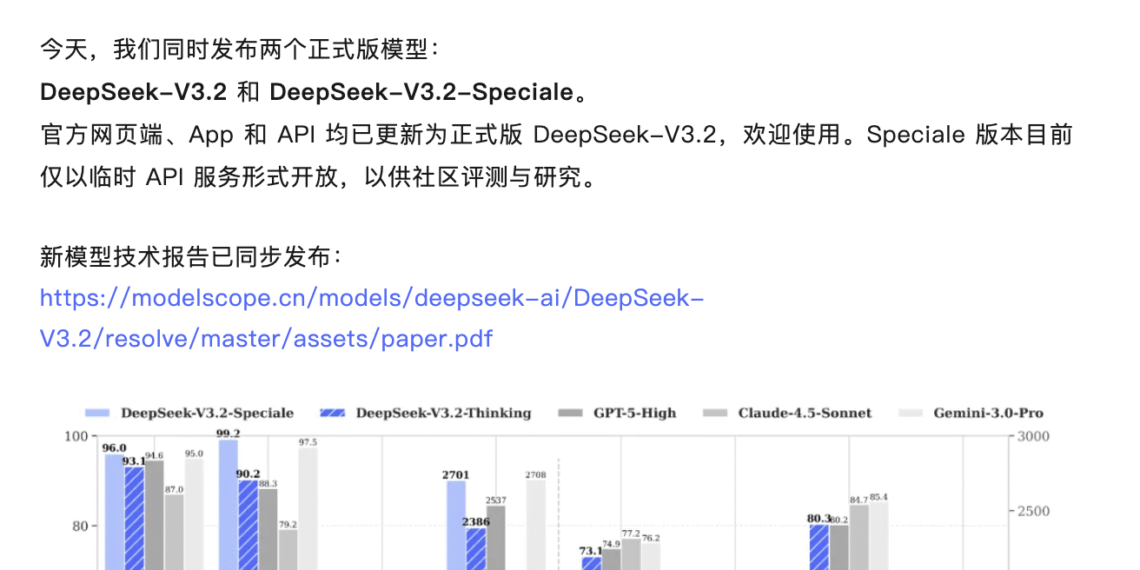

According to official data, DeepSeek-V3.2 matches GPT-5 on public reasoning benchmarks and comes close to Gemini-3.0-Pro. Its outputs are also significantly shorter than those of models such as Kimi-K2-Thinking, which lowers computational cost. The Speciale variant goes a step further by integrating DeepSeek-Math-V2’s theorem-proving abilities, reaching gold-medal-level results in competitions such as the IMO, CMO, ICPC, and IOI. In the ICPC, its performance was on par with that of the second-place human team.

For the first time in a DeepSeek model, thinking mode is combined with tool invocation, so the model can call external tools while it reasons. This was made possible by large-scale synthesis of agent training data, covering more than 85,000 complex instructions across more than 1,800 environments, which significantly improved the model’s generalization. According to official statements, DeepSeek-V3.2 achieves the highest agent-evaluation scores among current open-source models, further narrowing the gap with proprietary models.
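To make the idea of calling tools during reasoning more concrete, the loop below is a minimal sketch of how such an interaction is commonly structured. It does not use the DeepSeek API: `stub_model`, the `TOOLS` registry, and the message fields (`reasoning`, `tool_call`) are hypothetical stand-ins chosen for illustration, and a real client would replace `stub_model` with a call to the model.

```python
from typing import Callable, Dict, List

# Hypothetical tool registry; the tool name and argument format are illustrative.
TOOLS: Dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split(","))),
}


def stub_model(messages: List[dict]) -> dict:
    """Stand-in for a model: asks for a tool once, then gives a final answer."""
    tool_results = [m for m in messages if m["role"] == "tool"]
    if not tool_results:
        # First turn: the "model" reasons and decides it needs a tool.
        return {
            "role": "assistant",
            "reasoning": "I should add the two numbers with the add tool.",
            "tool_call": {"name": "add", "arguments": "2,3"},
        }
    # Later turn: the tool result is available, so answer directly.
    return {
        "role": "assistant",
        "reasoning": "The tool returned the sum.",
        "content": f"The answer is {tool_results[-1]['content']}.",
    }


def run_agent(question: str, max_steps: int = 5) -> str:
    """Alternate between model turns and tool executions until a final answer."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = stub_model(messages)
        messages.append(reply)
        call = reply.get("tool_call")
        if call is None:
            return reply["content"]
        # Execute the requested tool and feed the result back to the model.
        result = TOOLS[call["name"]](call["arguments"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("no final answer within max_steps")


print(run_agent("What is 2 + 3?"))
```

The key point is that tool results are appended to the same conversation the model is reasoning over, so each reasoning step can build on earlier tool output.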

The experimental version, DeepSeek-V3.2-Exp, was released two months ago and received positive user feedback. It introduced DeepSeek Sparse Attention (DSA), a sparse attention mechanism that maintained stable performance across diverse scenarios. The Speciale version is currently available through a temporary API so that the community can use it for research and benchmarking.
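For readers unfamiliar with sparse attention, the following is a rough sketch of the general idea: each query attends to only its `k` highest-scoring keys instead of all of them. This is a generic top-k illustration, not DeepSeek's DSA implementation; the function name and the selection rule are assumptions, and for simplicity the selection here reuses the full attention scores, so it shows the restricted softmax rather than any actual compute savings.

```python
import math
from typing import List


def topk_sparse_attention(
    q: List[float],
    keys: List[List[float]],
    values: List[List[float]],
    k: int,
) -> List[float]:
    """Attend from one query to only the k highest-scoring keys."""
    scale = math.sqrt(len(q))
    # Scaled dot-product score between the query and every key.
    scores = [sum(a * b for a, b in zip(q, key)) / scale for key in keys]
    # Keep the indices of the k largest scores; all other keys are ignored.
    top = sorted(range(len(keys)), key=lambda i: scores[i], reverse=True)[:k]
    # Numerically stable softmax over the selected keys only.
    m = max(scores[i] for i in top)
    weights = {i: math.exp(scores[i] - m) for i in top}
    total = sum(weights.values())
    dim = len(values[0])
    # Weighted sum of the selected value vectors.
    return [sum(weights[i] / total * values[i][d] for i in top) for d in range(dim)]


q = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
print(topk_sparse_attention(q, keys, values, k=1))
```

With `k` equal to the number of keys, the function reduces to ordinary dense softmax attention; smaller `k` drops the lowest-scoring keys entirely.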