
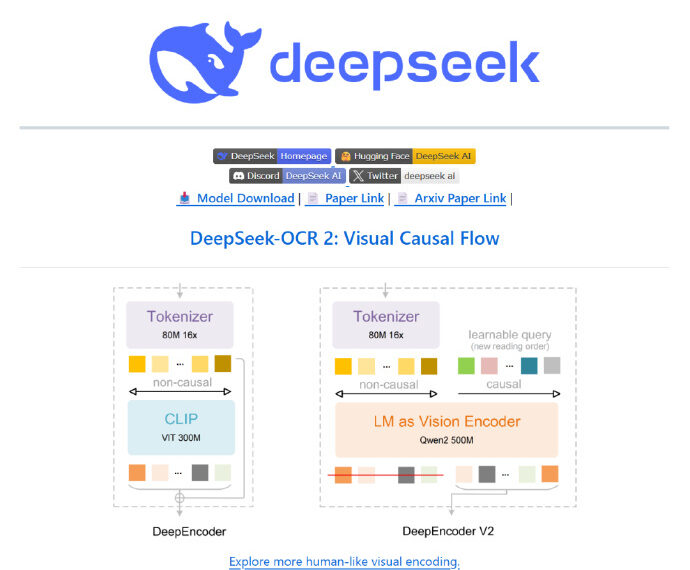

On January 27th, the DeepSeek team released a research paper titled “DeepSeek-OCR 2: Visual Causal Flow” and open-sourced the DeepSeek-OCR 2 model. The model introduces a new encoder architecture, DeepEncoder V2, which dynamically reorders visual information according to the semantics of the image instead of processing it in a fixed order. In effect, the model arranges visual content into a plausible reading sequence before recognizing text, more closely mimicking how humans read.

Traditionally, vision-language models split an image into many visual tokens and process them in a fixed, grid-like order, from the top-left to the bottom-right. While simple, this approach does not match how humans navigate complex documents, tables, or mathematical formulas: readers often jump between elements based on semantic and logical relationships.
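To make the contrast concrete, here is a minimal Python sketch, assuming a toy two-column page divided into a 3×4 grid of patches. `raster_order` reproduces the fixed top-left to bottom-right scan; `column_aware_order` is a hypothetical hand-written rule that finishes the left column before starting the right one. Both function names are illustrative. DeepEncoder V2 learns its ordering from image semantics, and this rule is not its method; it only shows how a content-aware order differs from a raster scan over the same patches.

```python
from typing import List, Tuple

Patch = Tuple[int, int]  # (row, col) position of a patch in the grid


def raster_order(rows: int, cols: int) -> List[Patch]:
    """Fixed top-left to bottom-right scan used by conventional encoders."""
    return [(r, c) for r in range(rows) for c in range(cols)]


def column_aware_order(rows: int, cols: int, split: int) -> List[Patch]:
    """Hypothetical layout-aware order for a two-column page (illustration only).

    Reads the left column (cols < split) top to bottom, then the right column.
    A real model would infer such an order from content, not from a fixed split.
    """
    left = [(r, c) for r in range(rows) for c in range(split)]
    right = [(r, c) for r in range(rows) for c in range(split, cols)]
    return left + right


if __name__ == "__main__":
    rows, cols = 3, 4  # tiny grid; columns 0-1 hold the left text column
    print(raster_order(rows, cols)[:6])
    print(column_aware_order(rows, cols, split=2)[:6])
```

In the raster scan, patches from both columns interleave row by row, so a left-column sentence is broken up by right-column text; the column-aware order keeps each column contiguous while visiting exactly the same set of patches.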

The DeepSeek team attributes its improvement to rethinking how visual data is conventionally ordered. In complex layouts especially, visual elements often follow logical sequences and hierarchies, so relying solely on spatial order can limit a model's understanding of the structural and semantic relationships within a document.

To validate the model's effectiveness, the team evaluated it on the OmniDocBench v1.5 benchmark, which covers a wide variety of Chinese and English documents such as academic papers, magazines, and reports. The benchmark assesses text recognition, formula parsing, table reconstruction, and reading-order comprehension.

The results are promising. With a comparatively low visual token limit, DeepSeek-OCR 2 achieved an overall score of 91.09%, a 3.73% improvement over its predecessor. On reading order, the model reduced the edit distance (where lower is better) from 0.085 to 0.057, roughly a one-third reduction, indicating a more accurate grasp of document structure and logic.
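Reading-order edit distance compares a predicted sequence of document blocks with the ground-truth sequence. The sketch below assumes a plain Levenshtein distance over block labels, normalized by the length of the longer sequence; OmniDocBench's own implementation (how blocks are matched, exact normalization) may differ, and the block labels are made up for illustration.

```python
from typing import Hashable, Sequence


def levenshtein(a: Sequence[Hashable], b: Sequence[Hashable]) -> int:
    """Minimum number of insertions, deletions, and substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, x in enumerate(a, start=1):
        cur = [i]
        for j, y in enumerate(b, start=1):
            cur.append(min(
                prev[j] + 1,             # delete x
                cur[j - 1] + 1,          # insert y
                prev[j - 1] + (x != y),  # substitute (free if equal)
            ))
        prev = cur
    return prev[-1]


def normalized_edit_distance(pred: Sequence[Hashable], ref: Sequence[Hashable]) -> float:
    """Edit distance scaled to [0, 1]; 0 means the orders match exactly."""
    denom = max(len(pred), len(ref))
    return levenshtein(pred, ref) / denom if denom else 0.0


if __name__ == "__main__":
    ref = ["title", "para1", "para2", "table", "caption"]
    pred = ["title", "para1", "table", "para2", "caption"]  # two blocks swapped
    print(normalized_edit_distance(pred, ref))  # 0.4
```

Swapping two adjacent blocks costs two substitutions, so on this five-block page the score is 2/5 = 0.4. Lower values mean the predicted reading order needs fewer edits to match the reference.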