
After receiving a test code for Manus AI yesterday, I spent several hours exploring its capabilities. Many engineers and product managers at smartphone and PC manufacturers have since asked me what Manus AI is really capable of.

The hardware industry’s keen interest in Manus AI ties back to a concept that dominates current discussions: LAM, or Large Action Model. The term is modeled on LLM, or Large Language Model. Whereas LLMs are trained with the goal of “surpassing human intelligence,” LAMs aim to act as human agents in the digital world, essentially functioning as extensions of their users.

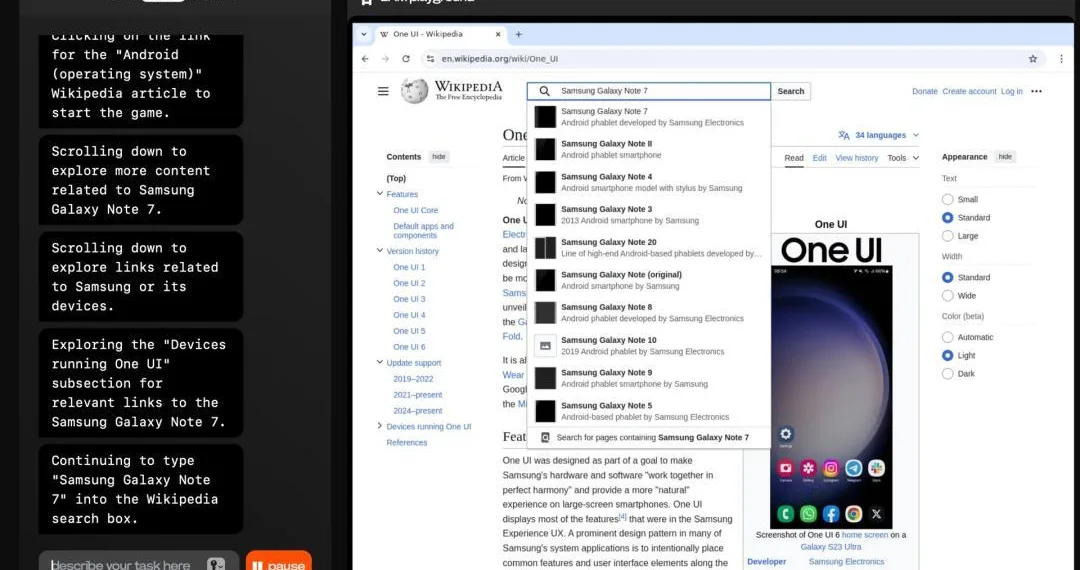

Manus AI puts this principle into practice by running on a cloud-based virtual machine with Ubuntu, where it works through a Chrome browser and various software applications to carry out a wide range of requests.

To do this, Manus AI combines natural language understanding, computer vision, user behavior learning, and context awareness with autonomous decision-making based on intent recognition. Its ability to use tools across different applications is what sets it apart from earlier computer-use experiences.

For instance, can Manus AI generate a video? Technically, yes. However, it does so by searching online for third-party AI video generation tools and combining several resources to fulfill the user’s request.
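The tool-chaining behavior described above can be sketched as a minimal plan-and-execute loop. This is a hypothetical illustration, not Manus AI’s actual implementation: the tool names (`web_search`, `call_external_service`), the keyword-based planner standing in for a model’s intent recognition, and the marker used to pass one step’s output into the next are all assumptions made for the example.

```python
from dataclasses import dataclass, field
from typing import Callable

PREVIOUS = "<from previous step>"  # hypothetical marker for chaining step outputs


# Hypothetical stub tools; a real agent would drive a browser, shell, or web API.
def web_search(query: str) -> str:
    """Pretend to search the web and return the name of a matching service."""
    return f"found-service-for:{query}"


def call_external_service(service: str, prompt: str) -> str:
    """Pretend to operate a third-party tool discovered online."""
    return f"{service} -> output for '{prompt}'"


@dataclass
class Step:
    tool: str
    args: dict


@dataclass
class Agent:
    tools: dict[str, Callable[..., str]]
    log: list[str] = field(default_factory=list)

    def plan(self, request: str) -> list[Step]:
        # Toy intent recognition by keyword; an LAM would use a model here.
        if "video" in request.lower():
            # No built-in video tool: find one online, then operate it.
            return [
                Step("web_search", {"query": "AI video generation tool"}),
                Step("call_external_service", {"service": PREVIOUS, "prompt": request}),
            ]
        return [Step("web_search", {"query": request})]

    def run(self, request: str) -> str:
        result = ""
        for step in self.plan(request):
            # Substitute the previous step's output wherever the marker appears.
            args = {k: (result if v == PREVIOUS else v) for k, v in step.args.items()}
            result = self.tools[step.tool](**args)
            self.log.append(f"{step.tool}: {result}")
        return result


if __name__ == "__main__":
    agent = Agent(tools={"web_search": web_search,
                         "call_external_service": call_external_service})
    print(agent.run("Make a short video of a sunset"))
    for line in agent.log:
        print(line)
```

In a real LAM, the hard parts are exactly what this sketch stubs out: replacing the keyword check in `plan` with a model call, and replacing the stub tools with real browser or shell actions.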

Looking at 2025, Agent AI, or LAM, is clearly moving toward the user side, yet it remains unclear how these technologies will be built into commercial products and what form they will take. Back in early 2024, the Rabbit R1 hardware and its companion LAM tool, Rabbit LAM Playground, showcased foundational capabilities that we now see in Manus AI. In official demonstrations, Rabbit R1’s LAM Playground helped users with tasks such as playing music and hailing rides by mimicking the way humans interact with websites.

Despite its potential, the initial version of LAM Playground had clear limitations: it struggled with CAPTCHAs and with using third-party tools for complex tasks, so it never caught on with mainstream users. As the AI landscape has evolved rapidly, discussion of the Rabbit R1 has all but vanished from the internet.

However, LAM remains an important area of research in the industry, and Manus AI has sparked renewed interest in it. Its much better handling of human-verification challenges has greatly expanded what it can do on the web, encouraging LAM developers to refine their own products and seize new opportunities.

Right now, hardware manufacturers developing LAM products have a clear advantage, especially system-level vendors. The command-execution model Manus AI uses demands substantial processing power, but running it through browsers or smartphone operating systems could lower costs and improve performance by making more efficient use of high-quality data.

The rise of simple, context-aware applications suggests that LAM can also run effectively on the user’s device. Developers can now build straightforward web-access and content-recognition features that meet everyday needs, such as planning travel itineraries or organizing resumes, without relying entirely on cloud computing.

During my testing, when I asked Manus AI to generate a PowerPoint presentation, it installed the necessary dependencies on its own and completed the task, although its responses were noticeably slow.

Manus AI has effectively shown how LAM can boost productivity, but several questions remain, especially about commercialization. Users are already speculating about what a service like Manus AI might cost. A $20/month subscription might seem feasible, but the high cost of running everything in the cloud is a major hurdle to adoption in the consumer market.

Established LLM products such as ChatGPT have already built solid subscription businesses. If LAM is to take the form of front-end hardware paired with back-end cloud services, it will have to go through a similar process of shaping consumer expectations and driving down costs.

As cloud-based LAM technology matures, we can expect more versatile AI hardware to emerge, including smartwatches, AI glasses, and perhaps even display-less devices such as headphones. In that landscape, LAM could evolve into an Agent UI, where users issue simple commands and receive results instead of navigating traditional interfaces.

The Rabbit R1 demonstration previewed early versions of the use cases Manus AI now handles, but it remains to be seen how these innovations will show up in everyday applications. Manus AI may not be the ultimate winner in this competitive field, yet its contribution to reshaping how people interact with technology is significant. One product manager working in the LAM sector emphasized its value, a sign of the impact Manus AI could ultimately have on the industry.