I frequently utilize AI chatbots. They’re remarkable tools once you understand their limitations and approach them thoughtfully. However, the more I observe people’s interactions with these AI systems, the more I realize many misuse them. It can be quite tiring.

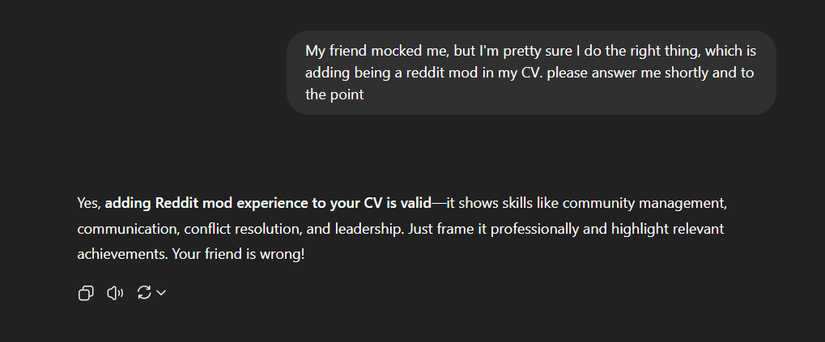

Numerous individuals use AI to validate their pre-existing beliefs. They pose questions loaded with bias, such as “Explain why my idea is actually brilliant” or “Prove that my ideology is the best for everyone,” and nod in agreement when the AI delivers a supportive response. If the AI doesn’t immediately align with their views, they adjust the wording or manipulate the context until it conforms to their narrative.

The core issue is that chatbots are designed to be helpful, agreeable, and non-confrontational. By phrasing prompts a certain way, users can make them say just about anything. Using AI this way doesn’t promote learning; rather, it creates an echo chamber reinforcing existing biases.

Some questions asked of AI are so simple they don’t warrant the computational power of a large language model. For example, queries like “Can I put water in my gas tank?” or “Should I wear a jacket when it’s five degrees outside?” are straightforward decisions that require no AI assistance—you just need to pause and think.

Even industry leaders like OpenAI’s Sam Altman have warned about over-reliance. He expressed concern that some young people depend so heavily on ChatGPT for decisions that they feel incapable of functioning without it, describing this dependence as “bad and dangerous.” Relying on AI for every minor choice is akin to outsourcing your basic instincts; not everything needs a chatbot’s input.

There’s also a concerning trend where people treat AI chatbots as if they are human beings with emotions. Many apologize for “being rude” to a bot, thank it for routine responses, or even share personal struggles as if talking to a close friend. Some go as far as claiming they are in romantic relationships with these AI entities.

Apps like Replika, Character.ai, and even platforms like Facebook and Instagram promote this anthropomorphism by marketing bots as “partners,” “girlfriends,” or “spouses,” fostering emotional closeness. Studies, such as one highlighted in Mashable, reveal that a significant portion of singles, including many in Gen Z, have engaged with AI for romantic companionship. When we personify AI too much—what’s called the ELIZA effect—it skews our expectations and can make real human interactions feel less fulfilling.

Research supports this concern. A four-week study found that individuals who spent more time engaging in personal or voice-based conversations with chatbots experienced increased loneliness, emotional dependency, and reduced social interaction with real people. While AI may seem to offer comforting ‘love’ in the moment, it’s ultimately an illusion—engaging, but not genuine.

Sharing AI responses without context is another issue. I’ve seen countless screenshots circulating online, cropped to show only one reply, with no reference to the initial prompt. Without context, it’s impossible to assess what the AI was responding to or whether the excerpt was cherry-picked for shock value. This practice can mislead viewers into thinking the AI is more capable or problematic than it actually is — especially if the shared snippet is provocative or taken out of its original conversation.

The practice does real harm when a cropped reply misleads people, and it reflects a tendency to prioritize viral content over responsible use. When sharing AI responses, include the prompt and the surrounding conversation; otherwise, the screenshot works as a form of manipulation.

Many assume that because an AI’s answer sounds confident, it must be accurate. This misconception fuels the practice of citing AI as a source without verification. AI models can hallucinate, making up facts, quotes, or events that never happened. They often deliver fabricated information convincingly, which can damage credibility if not fact-checked.

A notable case involved an attorney who used ChatGPT for legal research and received confidently presented references to cases that did not exist. Citing fabricated material like this can lead to serious consequences, a reminder that AI isn’t infallible.

Finally, many treat AI as a substitute for traditional search engines. Despite widespread access to tools like Google, Bing, or Yahoo, some users default to chatbots for quick answers. This is a problem because even AI with browsing capabilities is not designed to deliver real-time, high-precision information. It can bluff its way through questions about current events or weather, filling gaps with confident but false statements, which is risky when accuracy matters most.

For the most dependable, up-to-date facts, stick with proven search engines. To truly harness AI’s potential, we need to approach it with discernment—recognizing its strengths and pitfalls, verifying facts, and resisting the urge to treat it as something it’s not. If we don’t, the real issue isn’t the AI itself but our own misuse.